Artificial Intelligence

Generalization to Specialization – Domain Adaptation of Large Language Models

Large language models have revolutionized the field of natural language processing, enabling machines to comprehend and generate human-like language. However, these models are primarily trained on general, colloquial language. Due to this, their understanding of specific domains such as legal or tax is often limited. This limits their ability to comprehend and generate specialized or technical language which poses a significant challenge.

Insights

- Finetuning these models with domain-specific datasets can help them learn the technical language and terminology of the field, thereby improving their performance.

- However, for very large models (e.g., in the range of 175 billion parameters) and closed-source models, this process presents challenges, including availability of computational resources and risk of catastrophic forgetting.

- Additionally, closed-source models do not provide flexibility in finetuning approach, which further adds to this challenge.

With recent release of various open-source models, which are available in various sizes, domain adaptation of large ML models has become more accessible and easier to implement. These models can be finetuned with domain-specific datasets, enabling them to understand and generate technical language and terminology with greater accuracy. In this paper, we present different techniques of finetuning open-source models like LLaMA (7 billion) on domain-specific dataset, which contain text from a particular industry or subject. It also addresses how we have overcome various challenges and presents our results. We will also present a comparative study of different models on legal dataset and then showcase how finetuning the open-source model significantly helped improve performance.

1. Introduction

The rapid progress in NLP, driven by development of large pre-trained language models, has revolutionized various applications. However, many real-world domains possess unique linguistic nuances, terminologies and contextual dependencies that generic language models do not capture effectively. This disparity between the broad knowledge captured by pre-trained models and specialized requirements of domain-specific tasks presents the problem statement we aim to address in this whitepaper.

The significance of adapting language models to domain-specific tasks cannot be understated. Domains such as medical, finance, legal and technical industries demand precise language understanding and contextual awareness when dealing with text data. The challenges within these domains stem not only from complex terminologies, but also from the intricate relationships between words, concepts and contexts.

Consider the healthcare industry, where language comprehension becomes a matter of utmost importance. Medical terminology is highly specialized, and even minor misunderstandings could lead to grave consequences. A language model that can grasp the context, nuances and potential implications of medical information is essential for accurate diagnosis, treatment recommendations and medical research.

In the legal field, the choice of a single word can drastically alter the meaning of a document. Ambiguities, synonyms and context-specific interpretations abound, making it imperative for language models to not just identify patterns but to discern subtle distinctions that hold legal weight. Failure to adapt to these intricacies might lead to contracts being misunderstood or misinterpreted, resulting in costly legal disputes.

Similarly, the financial sector relies on precise language interpretation for risk assessment, market analysis and regulatory compliance. A language model incapable of differentiating between financial jargon and everyday language risks providing incorrect advice, compromising investment decisions, and even violating legal guidelines.

The technical domain also poses unique challenges. Engineers, programmers and scientists often communicate using domain-specific terms that might be entirely unfamiliar to a general language model. For effective collaboration and information retrieval, a language model must have the ability to comprehend and generate text within the context of these specialized domains.

In each of these scenarios, and many more, inaccurate or irrelevant outputs from a generic model can lead to costly errors, compliance issues or even patient safety concerns. The ability to fine-tune a language model to comprehend and generate domain-specific text is thus pivotal for maintaining accuracy, efficiency and quality in various applications. Failure to do so could result in errors, misinterpretations and potentially severe consequences. As such, the development of methods to adapt large language models to domain-specific requirements is a critical endeavor, bridging the gap between broad knowledge of generic models and precision required for specialized domains. This whitepaper delves into the challenges, strategies and implications of this process, shedding light on its importance in enhancing the accuracy, efficiency and quality of language comprehension across various applications.

2. Literature Review

Domain adaptation is a critical aspect of natural language processing that aims to enhance performance of language models in specific target domains by leveraging knowledge from related source domains. In this section, we discuss related work done in this area.

In previous research, Gururangan et al. (2020) presented two methods to enhance language models (LMs) through pre-training using either a task's training data or a separate unlabeled corpus, specific to a domain. These methods are referred to as Task-Adaptive Pre-training (TAPT) (Howard and Ruder, 2020) and Domain-Adaptive Pre-training (DAPT). The study showcased benefits of including additional data from target domain during pre-training when compared to just finetuning a pre-trained language model (PLM).

Zhang et al. (2020) expanded vocabulary of RoBERTa with previously out-of-vocabulary (OOV) whole words from the domain. They systematically added frequently occurring whole words until the OOV rate on task-specific corpus dropped to 5%. Their model, initialized with random weights, was then pre-trained. This strategy led to performance improvements on TechQA (Castelli et al., 2020) and AskUbuntu (Lei, no date) datasets. Similarly, Tai et al. (2020) enriched BERT with tokens selected by their frequency, incorporating 12,000 OOV wordpieces. They pre-trained a modified BERT variant where only embeddings of new tokens were adjustable, while original embeddings remained fixed. Their experimentation demonstrated that using over 12,000 augmented tokens did not enhance performance in bio-medicine Named Entity Recognition (NER) and relation extraction tasks. Additionally, after augmentation, performance improved further with extended pre-training, spanning from 4 to 24 hours of training.

Poerner, Waltinger and Schutze (2020) took a distinct approach by expanding BERT's vocabulary with all OOV whole words from the domain, adding 31,000 tokens to the base vocabulary of BERT-base-cased, which consists of 29,000 wordpieces. They trained a word2vec model on an in-domain corpus and devised a linear transformation to map word embeddings into the model's input embedding space. Unlike further pre-training, during finetuning, both original and adapted tokenizers were used. During inference, finetuned model was run with both tokenizers, and outputs were averaged. This method outperformed BERT in eight bio-medical NER tasks, yet it increased parameter size of BERT-base-cased by 2.2 times due to embeddings of additional tokens, thereby doubling computational resources required for inference.

Hofmann, Pierrehumbert and Schütze (2021) highlighted that Wordpiece tokenization may inadequately capture semantics of derivationally complex words, advocating for an alternative approach using a modified version of Wordpiece that generates subword segmentations comprising linguistic prefixes, suffixes and affixes. This subword tokenizer surpassed WordPiece in discerning the polarity of words and their source domains. Similar approaches, involving projection-based and mean pooling methods, were employed to embed novel tokens in BERT.

Research has demonstrated that training domain-specific language models from scratch yields superior in-domain performance compared to using out-of-domain PLMs (Huang, Altosaar and Ranganath, 2020). Besides Gururangan et al. (2020), previous studies have emphasized the effectiveness of continued pre-training for domain adaptation of PLMs, such as Alsentzer et al. (2019), Chakrabarty, Hidey and Mc Keown (2019), and Lee et al.( 2020).

In the context of Aspect-Target Sentiment Classification, Rietzler et al. (2020) employed both DAPT and task-specific finetuning to tailor language model representations. The identification of domain-specific characteristic words is a well-explored area, and various metrics have been proposed for this purpose, including comparing token distributions in contrasting corpora ((Rayson, Leech and Hodges, 1997) , (Monroe, Colaresi and Quinn, 2008), (Kessler, 2017)). Muthukrishnan, Gerrish and Radev (2008) leveraged pointwise KL-divergence to differentiate informative key phrase candidates in a domain corpus relative to a background.

3. Domain Adaptation Techniques

While there exists multiple techniques to adapt large language models to specific domains, listed below are some of them:

3.1 Pre-training followed by finetuning

A comprehensive approach to domain adaptation involves two primary steps:

- Initial pre-training on a large and diverse dataset, followed by,

- Finetuning on a smaller dataset specific to the task

The pre-training phase allows the model to grasp general linguistic features across diverse domains. However, the distinctive aspect of this approach lies in the selection of domain-specific data for pre-training. By incorporating a substantial amount of domain-specific text during pre-training, the model becomes inherently attuned to target domain's nuances. Subsequent finetuning on task-specific data further refines the model's capabilities, resulting in superior performance.

Although computationally intensive and data-dependent, this approach is highly effective when sufficient computational resources and domain-specific data are available.

Figure 1: Pre-training followed by finetuning

3.2 External Data Augmentation

External data augmentation introduces a dynamic element into domain adaptation. This technique focuses on enhancing model's comprehension by integrating external data during inference. The key to maintaining domain-specific context lies in selecting external data sources that closely resemble target domain. By expanding the model's linguistic context, it develops the ability to handle variations in style, vocabulary and tone. Despite limitations imposed by context window, strategic incorporation of external data can significantly improve model performance without compromising essence of the domain.

The integration of both explicit and implicit knowledge sources strengthens Language Model Models (LLMs) in their pursuit of domain specialization. The combination of these external knowledge types empowers LLMs to capture core aspects of a domain and proficiently address context-specific inquiries. The augmentation of domain knowledge has potential to refine versatility and adaptability of language models, enabling them to excel across a diverse range of linguistic scenarios.

Figure 2: External Data Augmentation

3.3 Fine-grained prompt techniques

The art of prompt engineering (Ma, 2023) involves sculpting contextualized instructions and samples. This technique offers a guided path for model's behavior in desired domain. By incorporating domain-specific vocabulary and prompts, model's perception of the context is enriched. The essence of this approach lies in the precision of prompt design, which dictates the extent to which model aligns with domain intricacies. Properly tailored prompts enhance model understanding and output relevance in target domain.

Figure 3: Fine-grained prompt techniques

3.4 Finetuning

Finetuning stands as a powerful domain adaptation technique, anchoring a model's transformation. By initializing a model with pre-trained weights and then recalibrating them using domain-specific data, the model embraces both domain nuances and pre-trained knowledge. Finetuning navigates balance between generalized language mastery and specialized domain expertise. Skillful adjustment of hyperparameters and thoughtful handling of the finetuning process enables model to flourish within the designated domain.

Figure 4: Finetuning

Finetuning can be done using various techniques:

3.4.1 Unsupervised finetuning

Unsupervised finetuning refers to refining a pre-trained machine learning model using unlabeled data. This process aims to enhance model's performance on a specific task by allowing it to adapt to nuances of the new data without relying on labeled examples. The model learns patterns, structures or representations from data without explicit guidance.

3.4.2 Supervised finetuning

Supervised finetuning involves adjusting a pre-trained model using labeled data. It is categorized into several types, based on nature of the training:

- Single task

Adapting a pre-trained model to perform better on a single specific task by providing labeled examples related to that task. - Multi-task

Enhancing a model's capabilities by training it on multiple related tasks simultaneously. This enables model to learn shared representations and potentially perform better on each individual task due to cross-task learning. - Sequential

Training a model on multiple tasks in a specific sequence. The output of one task might serve as input or additional training data for the next task. - Instruction-tuned

Instruction-tuned finetuning involves providing explicit directions to shape a model's behavior, offering greater control over outputs. These instructions could come in the form of labeled data, directives or hints. - Chain-of-thought

This involves creating sequences of reasoning called CoT reasoning samples using a substantial teacher model. These samples are carefully filtered and organized into prompt-completion pairs. Subsequently, smaller student models are finetuned using these CoT reasoning samples, resulting in a refined and more adept model.

3.4.3 Reinforcement Learning with Human/AI Feedback

RLHF (Christiano et al., 2017) is an approach that integrates reinforcement learning with insights from human experts and AI-generated feedback. Models learn by receiving rewards or penalties in interactive environments while benefiting from the nuanced guidance of human evaluators and scalable input of AI-generated feedback. This technique holds promise for faster convergence and more adaptable models in complex domains.

3.4.4 Parameter Efficient

- LoRA

Low-rank adaptation (LoRA) (Hu et al., 2021), introduced by Microsoft researchers, offers a more cost-efficient method for finetuning large language models (LLMs). It involves freezing original model weights and applying modifications to a separate set of weights, which are combined with the original parameters. By recognizing that not all dimensions of the weight matrices are equally necessary, LoRA reduces the dimensionality of downstream parameters, preserving the model's learning capacity while significantly cutting the costs of finetuning. - Soft Prompts

This method involves using soft prompts (Lester, Al-Rfou and Constant, 2021), which are adaptable sets of learnable vectors, to condition frozen models. Unlike traditional text prompts, these soft prompts can be optimized across a training dataset, allowing them to distill information from large datasets. This approach is more efficient than manual prompt design and enables better utilization of training examples.

In this paper, we delve into performing domain adaptation by finetuning using PEFT LoRA techniques. The first phase of the study encompasses judicious selection of a singular domain as its foundational step.

4. Domain Selection

4.1 Understanding Domain-Specific Nuances

As discussed in previous sections, language is a diverse and dynamic tool that adapts to various contexts. Different domains introduce unique linguistic nuances and complexities that require specialized language models. Let's explore a few domains and their inherent challenges:

4.1.1 Medical Domain

Medical texts are laden with complex terminology, abbreviations and scientific jargons. Understanding patient histories, diagnoses and treatment plans demands a language model that can navigate these intricacies.

4.1.2 Financial Domain

Financial documents are rich in numerical data, economic terms and market trends. A language model tailored to this domain must interpret financial jargon accurately and predict market behaviors.

4.1.3 Technical Domain

Technical documents encompass coding syntax, engineering concepts and scientific principles. Precise text generation and comprehension are critical for knowledge transfer among experts in this domain.

4.1.4 Legal Domain

Legal texts are characterized by archaic language, Latin phrases and context-dependent interpretations. Legal domain requires language models that can grasp precise wording, context and implications of each phrase.

4.2 Significance of the Legal Domain

Out of these diverse domains, legal domain stands out for its unparalleled demand for accuracy, precision and context-awareness. Legal domain is characterized by intricate terminologies, complex syntax and specific contextual nuances that are integral to its effective functioning. Legal documents, contracts, statutes and case laws require a profound understanding of language, where the choice of a single word can hold significant legal implications. Legal documents wield significant consequences, often influencing business transactions, civil rights and even individual freedoms. The legal profession relies heavily on text analysis, requiring a language model that can interpret contracts, comprehend case laws and generate legally sound content. Misinterpretation or inaccuracies can lead to legal disputes, financial liabilities and even miscarriage of justice. Therefore, the need for language models tailored to legal domain is critical to ensure reliable, efficient and accurate analysis of legal text.

4.3 Unique Challenges of the Legal Domain

- Legal Terminology

The legal domain features a vast vocabulary of specialized terms, many with nuanced meanings. Accurate comprehension and usage of these terms is essential for proper legal analysis. - Contextual Interpretation

Legal texts often depend on surrounding context to derive meaning. A language model must understand the broader context to provide accurate interpretations. - Precedent Analysis

Legal professionals often rely on analysis of past cases and legal precedents. A language model must grasp significance of these precedents to provide meaningful insights. - Latin Phrases and Archaic Language

Many legal documents still contain Latin phrases and antiquated language. Understanding and generating content in line with these nuances is crucial. - Ambiguities and Precision

Legal documents require precise interpretation to avoid ambiguities. A language model must provide accurate interpretations that align with legal standards.

By focusing on legal domain, this whitepaper intends to showcase the entire process of selecting a domain, evaluating existing models, identifying limitations and designing a finetuning methodology tailored to the unique requirements of understanding legal text. This will provide a valuable case study that highlights the broader significance of domain-specific adaptation for enhancing language model performance.

5. Model Evaluation in the Legal Domain

Before delving into the finetuning process, it's crucial to evaluate performance of existing pre-trained language models in the context of legal domain. Several prominent language models such as BERT (Devlin et al., 2018), RoBERTa (Liu et al., 2019), GPT-3 (OpenAI, 2020), LLaMA (Touvron et al., 2023), Flan-T5 (Won et al., 2022), Falcon (Technology Innovation Institute, 2023) have gained prominence due to their broad language understanding capabilities. However, their out-of-the-box performance in legal domain might be sub-optimal due to domain-specific complexities.

5.1 Limitations of Generic Models in the Legal Domain

Generic language models lack specialized knowledge and context needed for accurate comprehension of legal text. Legal documents often contain archaic language, Latin phrases, and context-dependent interpretations that are not handled well by generic models. Moreover, the high-stakes nature of legal documents necessitates higher level of accuracy, which might not be achievable without domain adaptation.

5.2 Performance Evaluation

A systematic evaluation of these models in legal domain involves benchmarking their performance on tasks such as legal document classification, contract analysis and case summarization. Metrics such as accuracy, precision, recall and F1-score provide insights into the models' abilities to comprehend and generate accurate legal text.

Following table shows performance of different models (pre-trained as well as finetuned) on the LexGLUE (Chalkidis et al., 2021) dataset.

Figure 5: Performance of different LLMs on LexGLUE Dataset

While there are models like LegalBERT (Chalkidis et al., 2020) and CaseLaw-BERT (Zheng et al., 2021), which are finetuned on legal data, they are finetuned with the task of text classification and multiple-choice Q&A. Our study indicates that following tasks are of most significance in the industry:

- Abstractive summary generation in plain language

- Legal advice generation

- Lexical Legal Text Rewriting

Performing a complete benchmark study on different models on these tasks was challenging due to compute restrictions. Hence, in model evaluation phase, we evaluate models on a small subset of data.

We analyzed following models for above-mentioned tasks:

- LLaMA-7b

- Alpaca-7b

- FlanT5-large

- Falcon-7b

- LLaMA-2-7b

The following table lists out details of each of these models:

Table 1: Open-source Large Language Models

| LLaMA-7b | Alpaca-7b | Flan-T5-large | Falcon-7b | LLaMA-2-7b | |

|---|---|---|---|---|---|

| Model size | 7 billion | 7 billion | 780 million | 7 billion | 7 billion |

| Training dataset size | 4.4TB(1.4T tokens) | - | - | 1500 billion tokens | 2T tokens |

| Datasets used for training | Common crawl, Wikipedia | 52k instruction following data | Trained on a mixture of tasks | RefinedWeb | New mix of publicly available online data |

| Architecture | Auto-regressive transformer | Instruction finetuned from LLaMA | Encoder-Decoder | Causal Decoder-Only | Auto-regressive transformer |

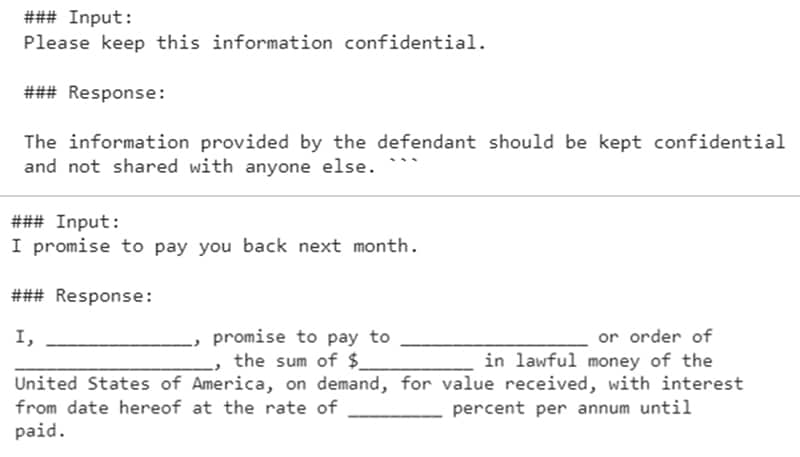

We first try to evaluate performance of some of these models on the desired tasks. We utilize techniques like zero-shot, one-shot as well as few-shot for complete understanding of model capabilities. The following table provides insights on out-of-box capabilities of these models using few-shot technique:

Table 2: Performance Comparison – Various Open-source Models

| LLaMA-7b | Flan-T5-large | Falcon-7b | LLaMA-2-7b | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Cos-sim | Rouge1 Fmeasure | RougeL Fmeasure | Cos-sim | Rouge1 Fmeasure | RougeL Fmeasure | Cos-sim | Rouge1 Fmeasure | RougeL Fmeasure | Cos-sim | Rouge1 Fmeasure | RougeL Fmeasure | |

| Abstractive summary generation in plain English | 0.50 | 0.19 | 0.16 | 0.54 | 0.25 | 0.14 | 0.53 | 0.35 | 0.26 | 0.53 | 0.38 | 0.28 |

| Legal advice generation | 0.49 | 0.23 | 0.13 | 0.50 | 0.23 | 0.12 | 0.49 | 0.30 | 0.11 | 0.51 | 0.26 | 0.12 |

| Legal text generation | 0.51 | 0.19 | 0.12 | 0.52 | 0.21 | 0.10 | 0.52 | 0.22 | 0.10 | 0.54 | 0.22 | 0.16 |

Additionally, we examine token count for around 300 words of legal text. As evident in the following comparison, both Flan-T5 and Falcon models produce fewer tokens than LLaMA and LLaMA-2 for same legal text segment. This observation provides insights into the vocabulary on which these models have been trained, suggesting they might have encountered legal text during their training.

Figure 6: Token Count Comparison of Different Open-Source LLMs

We also study models like Legal-Pegasus (nsi319/legal-pegasus · Hugging Face, 2021) and Legal-LED-Base (nsi319/legal-led-base-16384 · Hugging Face, 2019). Both these models are finetuned for the task of abstractive summary generation. However, they were not tuned for producing summaries in plain English.

This evaluation phase highlights the need for finetuning to bridge performance gap and enhance models' suitability for legal domain.

6. Legal Domain Adaptation – Our Approach

Based on evaluation results, we decide to explore finetuning models and see improvement in results. We were restricted by compute and only had Colab T4 GPU and Kaggle T4x2 GPUs available for finetuning purpose. Hence, for purpose of the experiments, we decided to finetune LlaMA-7b, Flan-T5 and LlaMA-2-7b models for different tasks.

6.1 Data Collection and Preparation

6.1.1 Exploring Various Legal Datasets

The initial step in our journey to adapt a language model for understanding legal text involved exploration of various legal datasets. We sought to identify comprehensive and representative datasets that encapsulated range of legal documents, including contracts, case law, statutes and legal opinions. These datasets were aimed at supporting tasks like abstractive summarization in plain language, generating legal advice, and rephrasing legal text. Our objective was to curate a dataset that encompasses the complexity and diversity of legal language, ensuring a robust foundation for finetuning process.

6.1.2 Challenges Encountered: Content Length and Context Window

During data collection phase, we encountered a notable challenge related to length of legal documents. Many legal texts were extensive, exceeding context window limitations of various LLMs. This posed a potential obstacle from the perspective of LLM finetuning. It became imperative to pre-process content to ensure that the data fit within the defined context window while preserving critical information.

The datasets explored and leveraged for various tasks are listed below:

- joelito/legal_case_document_summarization (Niklaus, no date)

- Law-AI/summarization (Shukla et al., 2022)

- lighteval/legal_summarization (Datasets, 2023)

- casehold/casehold (CaseHOLD, 2023)

- pile-of-law/pile-of-law (Henderson et al., 2022)

6.1.3 Tackling Duplicate Data and Data Quality Issues

Another challenge we encountered was presence of duplicate data across different datasets. While duplicate content might be a common occurrence due to nature of legal texts, it introduced redundancy that could negatively impact finetuning process. Additionally, quality of target data posed issues as well.

For the task of abstractive summary generation, many existing targets were extractive in nature, which conflicted with our goal of enhancing abstractive language generation. In addition, there was lack of datasets with abstractive summary in plain English.

Similarly, for the task of legal advice generation, some elements of the dataset contained responses which were extremely generic and not meaningful. Filtering out such elements was critical for the finetuning process.

This misalignment highlighted the need to create a dataset that aligns more closely with respective tasks.

6.1.4 Synthetic Data Generation

Addressing Data Limitations

To overcome these challenges, we took a multifaceted approach. For each of the identified tasks, we carefully prepared data by segmenting lengthy documents into contextually coherent sections, allowing model to focus on localized legal text comprehension. Additionally, we addressed duplicate content issue by employing techniques to detect and eliminate redundant data, enhancing diversity of our dataset. To mitigate target data quality concerns, we generated synthetic targets using abstractive summarization techniques. This ensured that our finetuned model would be capable of generating abstractive legal text, aligning with our desired outcome.

6.1.5 Ensuring Dataset Diversity and Abstractive Targets

By synthesizing data and curating diverse legal documents, we aimed to create a dataset that not only fit within the finetuning context window but also presented a comprehensive range of legal language intricacies. The synthetic data generation process enabled us to address the challenge of generating abstractive targets, provide reasoning for responses, enabling model to produce human-like legal text that captures essence of legal concepts rather than relying solely on extractive representations.

Through these data collection and pre-processing efforts, we aimed to create a dataset that would be instrumental in training a language model specialized in understanding and generating legal text. This dataset, carefully refined to address challenges encountered, forms the foundation upon which our finetuning process is built. In subsequent sections, we delve into specifics of how we transformed this curated data into a highly capable legal language comprehension tool.

6.2 Experiments

6.2.1 Abstractive Summary Generation in Plain English

6.2.1.1 Dataset

As discussed in previous section, original dataset used here is a collection of legal cases and their abstractive summaries. The dataset had around 25,000 {document, summary} pairs. We used a subset of data which consisted of around 1500 {document, summary} pairs. We prepared synthetic data for this dataset where target is abstractive summary in plain English. We utilized OpenAI GPT-3.5-turbo for preparation of synthetic data.

This was then followed by other data pre-processing steps such as cleaning data by removing junk characters, correcting spelling mistakes, de-duplicating content and removing extra spaces & lines.

6.2.1.2 Model Selection

We explored various models as discussed in section 5.2. By considering various factors such as available compute, dataset size and intended task, we decided to finetune LlaMA-7b for this task.

While the initial LLaMA model weights are not accessible, they were disclosed and later modified for integration with HuggingFace Transformers library. We employed decapoda-research weights for our purpose.

Since we were restricted by compute availability, the 7-billion parameter LLaMA model was used for this purpose.

We split the dataset into train-validation-test split in the ratio of 70%, 15% and 15%.

6.2.1.3 Technique

We adopted the PEFT LoRA technique for finetuning the model.

When we finetune a large language model (LLM) for a specific task, we often need to adjust weights of the model to perform well on that task. The traditional way involves updating all weights in the model, which can be computationally expensive and may lead to overfitting and catastrophic forgetting.

The PEFT LoRA approach proposes decomposing weight changes (ΔW) into a lower-rank representation. Instead of updating full weights of the model, LoRA decomposes matrices involved in weight updates. This decomposition is learned through backpropagation during training.

In LoRA technique, weight updates are kept separate from original weights. This separation allows for a modified forward pass during training.

Large language models that are pre-trained have full-rank weight matrices. However, when adapting these models to new tasks, the weight matrices can have a lower "intrinsic dimension". This means that data distribution for new task can be represented in a lower-dimensional space while retaining important information.

The LoRA method involves decomposing weight update matrix ΔW for the adapted task into smaller matrices WA and WB. These matrices are then trained while keeping original weights frozen. The goal is to capture task-specific information efficiently with these smaller matrices.

Choosing the Rank

Rank (r) of the decomposition is a hyperparameter that needs to be selected. A smaller r simplifies matrices and reduces number of parameters to learn during adaptation, leading to faster training. However, a smaller r might result in lower adaptation quality. The choice of r involves trade-off between model complexity and adaptation capacity.

For the purpose of our experimentation, we found the value of r=8 best suited.

Parameter Efficiency

The introduction of new weight matrices (WA and WB) does not necessarily mean more parameters. In fact, these matrices are much smaller than the full weight update matrix ΔW, making the approach parameter-efficient.

Reducing Inference Overhead

While keeping LoRA weight matrices separate during inference introduces a small computational overhead, it is possible to update original weights directly with LoRA weights after training, mitigating this issue.

Trainable Parameters

By leveraging LoRA technique, number of trainable parameters was 4194304. This is only around 6% of the original parameters, which makes it possible to finetune this model on a general purpose compute like T4.

6.2.1.4 Result

The following table shows performance of the finetuned model in comparison to other models:

Table 3: Performance Comparison – Abstractive Summary Generation (Plain Language)

| LLaMA-7b | Finetuned | Pegasus | |||||||

|---|---|---|---|---|---|---|---|---|---|

| rouge1 | rouge2 | rougeL | rouge1 | rouge2 | rougeL | rouge1 | rouge2 | rougeL | |

| Precision | 0.111166 | 0.062909 | 0.089644 | 0.525675 | 0.267368 | 0.394109 | 0.433057 | 0.22486 | 0.286456 |

| Recall | 0.779551 | 0.454763 | 0.636064 | 0.531306 | 0.276605 | 0.402414 | 0.640169 | 0.332295 | 0.419018 |

| Fmeasure | 0.19375 | 0.110189 | 0.156505 | 0.523794 | 0.26951 | 0.394858 | 0.510227 | 0.264582 | 0.336045 |

| LED | Alpaca | OpenLLaMA | |||||||

|---|---|---|---|---|---|---|---|---|---|

| rouge1 | rouge2 | rougeL | rouge1 | rouge2 | rougeL | rouge1 | rouge2 | rougeL | |

| Precision | 0.228458 | 0.111756 | 0.155708 | 0.473043 | 0.214609 | 0.335346 | 0.213239 | 0.117775 | 0.163293 |

| Recall | 0.705132 | 0.351929 | 0.483727 | 0.390942 | 0.178675 | 0.278828 | 0.76395 | 0.445927 | 0.599052 |

| Fmeasure | 0.341406 | 0.168377 | 0.233461 | 0.41914 | 0.191585 | 0.297987 | 0.327178 | 0.182823 | 0.251803 |

Below graph shows Rouge-Fmeasure scores of our finetuned models and other models which are finetuned on legal datasets, clearly indicating improvement in our finetuned model for this task.

Figure 7: RougeScore Comparison - Abstractive Summary Generation (Plain Language)

The following graphs indicate Flesch-Kincaid grade and ease scores. The Flesch Reading Ease score rates text readability between 1 and 100, with higher scores indicating easier readability. Similarly, Flesch-Kincaid Grade indicates grade level of reading proficiency required to understand the text.

Figure 8: Flesch-Kincaid Scores

This clearly indicates that the purpose with which the model was finetuned (i.e. generating summaries in plain English), is clearly met.

6.2.2 Legal Advice Generation

6.2.2.1 Dataset

For this specific undertaking, we utilized "r_legaladvice subset" extracted from the larger "pile-of-law" dataset. This subset comprises 100,000 instances of question-answer pairs (237 MB) that pertain to diverse scenarios, each accompanied by relevant legal counsel for that context.

6.2.2.2 Model Selection

As in the previous task, based on initial exploratory phase with different models and availability of compute and data, we finetune LlaMA-2-7b for this task.

6.2.2.3 Technique

For finetuning LLaMA-2 on a consumer GPU, we leverage PEFT QLoRA technique.

QLoRA is a groundbreaking finetuning technique that facilitates efficient training of large transformer models on a single general-purpose GPU. By leveraging 4-bit quantization and Low Rank Adapters (LoRA), QLoRA maintains 16-bit finetuning performance while significantly reducing memory usage. QLoRA introduces memory-saving innovations such as 4-bit NormalFloat, double quantization and paged optimizers. This technique opens doors for resource-efficient large-scale language model finetuning. We used this technique to finetune LLaMA-2-7b model.

The initial dataset consisted of distinct questions and corresponding answers. We curated the dataset to align it with specific instructions.

We utilized a Colab T4 GPU for training. Completing a single epoch required approximately 2.5 hours of runtime. The graph below illustrates the training loss achieved.

Figure 9: Training Loss (Task - Legal Advice Generation)

6.2.2.4 Result

Below graph depicts enhancement in performance achieved by the finetuned model.

Figure 10: Score Comparison (Task - Legal Advice Generation)

We use Rouge-score and cosine similarity metrics to evaluate model generated output on unseen test data. As illustrated in the graph, the finetuned model outperforms the base LLaMA-2 model in this task.

6.2.3 Legal text generation

In the field of legal communication, being precise and clear is extremely important. Transforming general content into accurate legal terminology requires meticulous attention to detail. To address this challenge, an innovative approach was employed - refining an open-source language model using unsupervised techniques.

6.2.3.1 Dataset

To infuse legal expertise, we compiled a set of legal documents, contracts, statutes and other texts from CASEHOLD dataset, including relevant HOLDING information. This focused collection of data became the foundation for training model through finetuning process. The greater the diversity & size of this dataset, the more effectively the model can understand intricacies of legal language.

The CASEHOLD dataset encompasses 53,000 cases, each associated with various HOLDING options. Our approach involves utilizing a subset of this dataset and introducing synthetic data through OpenAI's gpt-3.5-turbo to enhance training data quality. In generating synthetic data, we provide reasoning for correct responses and case summaries. The convergence of the original case text and synthetic content constitutes the dataset employed for finetuning the model.

6.2.3.2 Model Selection

Due to its extensive linguistic knowledge and understanding of context, grammar and semantics we finetune LLaMA-7b model for this task.

6.2.3.3 Technique

PEFT LoRA

Similar to task 1, we utilize PEFT LoRA technique for finetuning the model. However, a notable distinction emerges in our approach for task 2. Instead of supervised finetuning utilized in task 1, we opt for an unsupervised finetuning approach followed by subsequent finetuning on a smaller dataset. This shift signifies departure from relying solely on labeled data for guidance. Instead, we leverage the intrinsic structure & patterns within the data itself to refine the model's performance. This approach enables model to learn from data's inherent properties, leading to a more versatile and adaptable final outcome.

The first finetuning (unsupervised) was completed in approximately 5 hours on two T4 GPUs. The dataset used for unsupervised finetuning consisted of approximately 5,000 records. The training and validation loss achieved in two epochs are depicted below.

Figure 11: Unsupervised Finetuning - Training and Validation Loss

Subsequently, we conducted supervised instruction-based finetuning to enhance model’s ability to adhere to instructions. This finetuning phase utilized a compact dataset consisting of approximately 500 instruction-based samples and spanned across two epochs.

Figure 12: Supervised Finetuning - Training and Validation Loss

6.2.3.4 Result

It was crucial to measuring success of finetuned model. Metrics such as ROUGE and Cosine similarity were employed to gauge similarity between generated legal content and desired output. Human evaluators also played a pivotal role in assessing accuracy, coherence, and legality of rewritten content.

Following screenshots illustrate finetuned model’s rephrasing of text using legal terminology.

Figure 13: Finetuned model generated text

We also assessed a measurement called “perplexity” for outcomes produced by the model.

Perplexity is a method to understand how well a language model is predicting the next word in a sequence. In our context, it helps us gauge how confident and accurate the model is, when generating legal content. A lower perplexity score indicates that model is more certain about its predictions and is better at using legal terminology in a coherent manner. On the other hand, a higher perplexity score suggests that model might be struggling to generate appropriate legal language.

By analyzing perplexity, we gained insights into quality and fluency of model’s rewritten legal content. This measurement provided us with valuable information on model’s ability to produce coherent and contextually accurate legal text during finetuning process.

Following table indicates various performance metrics:

Table 4: Performance Comparison – Legal Text Generation

| Base-Model (LLaMA-7b) | Finetuned model (Unsup) | Finetuned model (Sup followed by Unsup) | |

|---|---|---|---|

| Perplexity | 15.13 | 10.05 | 7.92 |

| Rouge1-Fmeasure | 0.22 | 0.26 | 0.40 |

| RougeL-Fmeasure | 0.16 | 0.19 | 0.33 |

| Cosine-Similarity | 0.62 | 0.79 | 0.89 |

Below figure depicts values plotted on a graph.

Figure 14: Performance Comparison (Base vs Finetuned Models)

6.2.4. Other Experiments

We also explored finetuning LlaMA and Flan-T5 for the task of performing classification (predicting the right

7. Conclusion

Finetuning LLMs for domain-specific data is pivotal for harnessing their true potential in specialized areas. With a structured approach, we can overcome challenges such as data scarcity and overfitting, leading to models that are both knowledgeable in general contexts and experts in specific domains.

In this whitepaper, we discussed transformative potential of domain adaptation for large language models, specifically within the intricate landscape of legal domain. We performed systematic evaluation of various open-source large language models and finetuned them for a range of legal tasks.

We explored various techniques such as LoRA, QLoRA, unsupervised finetuning followed by supervised finetuning, chain-of-thought and instruction-tuning for different open-source models. We restricted ourselves to models in range of 7-billion parameters, due to compute restrictions. The entire finetuning was carried out on consumer GPUs, freely available on Colab and Kaggle.

The experiments distinctly demonstrate noticeable enhancement in model's performance through finetuning with domain-specific data. Furthermore, results illustrate how finetuning these models in legal domain leads to a more comprehensive understanding of that domain.

The significance of our findings extends well beyond the confines of legal realm. The methodologies and insights we have unveiled present a blueprint for harnessing adaptability of large language models in diverse specialized domains. The strategic interplay between pre-trained models and targeted finetuning strategies can potentially revolutionize industries as varied as medicine, finance, technology, and more. By pushing boundaries of what these models can accomplish, we pave the way for innovation and progress across multi-faceted sectors.

Yet, as we move into these new possibilities, it is imperative to navigate ethical considerations and data biases that accompany domain adaptation and finetuning. The power of these models also carries a responsibility to ensure fair and unbiased outcomes. As we adapt them to specialized domains, we must remain vigilant to the risks of perpetuating existing biases or creating new ones. Addressing data imbalance, transparency in decision-making, and potential reinforcement of social inequalities should be at the forefront of our endeavors.

In the bigger picture of technological progress, adapting large language models to specific domains is an important step. As we look to the future where these models are used across different areas, let us keep fairness, ethics and responsible innovation in mind. The lessons we have learned from working with legal tasks can guide us towards a technology-driven future that is fair, equal and beneficial for all.

References

- Alsentzer, E. et al. (2019) Publicly available clinical BERT embeddings, Aclanthology.org. Available at: https://aclanthology.org/W19-1909.pdf (Accessed: August 22, 2023).

- CaseHOLD (2023) “casehold.” Available at: https://huggingface.co/datasets/casehold/casehold (Accessed: August 22, 2023).

- Castelli, V. et al. (2020) The TechQA Dataset, Aclanthology.org. Available at: https://aclanthology.org/2020.acl-main.117.pdf (Accessed: August 22, 2023).

- Chakrabarty, T., Hidey, C. and Mc Keown, K. (2019) IMHO finetuning improves claim detection, Aclanthology.org. Available at: https://aclanthology.org/N19-1054.pdf (Accessed: August 22, 2023).

- Chalkidis, I. et al. (2020) “LEGAL-BERT: The Muppets straight out of Law School,” arXiv [cs.CL]. Available at: http://arxiv.org/abs/2010.02559 (Accessed: August 22, 2023).

- Chalkidis, I. et al. (2021) “LexGLUE: A benchmark dataset for legal language understanding in English,” arXiv [cs.CL]. Available at: http://arxiv.org/abs/2110.00976 (Accessed: August 22, 2023).

- Christiano, P. et al. (2017) “Deep reinforcement learning from human preferences,” arXiv [stat.ML]. Available at: http://arxiv.org/abs/1706.03741 (Accessed: August 22, 2023).

- Datasets, E. (2023) “legal_summarization.” Available at: https://huggingface.co/datasets/lighteval/legal_summarization (Accessed: August 22, 2023).

- Devlin, J. et al. (2018) “BERT: Pre-training of deep bidirectional Transformers for language understanding,” arXiv [cs.CL]. Available at: http://arxiv.org/abs/1810.04805 (Accessed: August 22, 2023).

- Gururangan, S. et al. (2020) “Don’t stop pre-training: Adapt language models to domains and tasks,” arXiv [cs.CL]. Available at: http://arxiv.org/abs/2004.10964 (Accessed: August 22, 2023).

- Henderson, P. et al. (2022) “Pile of law: Learning responsible data filtering from the law and a 256GB open-source legal dataset,” arXiv [cs.CL]. Available at: http://arxiv.org/abs/2207.00220 (Accessed: August 22, 2023).

- Hofmann, V., Pierrehumbert, J. B. and Schütze, H. (2021) “Superbizarre is not superb: Derivational morphology improves BERT’s interpretation of complex words,” arXiv [cs.CL]. Available at: http://arxiv.org/abs/2101.00403 (Accessed: August 22, 2023).

- Howard, J. and Ruder, S. (2018) Universal language model finetuning for text classification, Aclanthology.org. Available at: https://aclanthology.org/P18-1031.pdf (Accessed: August 22, 2023).

- Hu, E. J. et al. (2021) “LoRA: Low-Rank Adaptation of large language models,” arXiv [cs.CL]. Available at: http://arxiv.org/abs/2106.09685 (Accessed: August 22, 2023).

- Huang, K., Altosaar, J. and Ranganath, R. (2020) ClinicalBERT: Modeling clinical notes and predicting hospital readmission, Arxiv.org. Available at: http://arxiv.org/abs/1904.05342 (Accessed: August 22, 2023).

- Kessler, J. (2017) “Scattertext: A browser-based tool for visualizing how corpora differ,” in Proceedings of ACL 2017, System Demonstrations. Stroudsburg, PA, USA: Association for Computational Linguistics.

- Lee, J. et al. (2020) “BioBERT: a pre-trained bio-medical language representation model for bio-medical text mining,” Bioinformatics (Oxford, England), 36(4), pp. 1234–1240. doi: 10.1093/bioinformatics/btz682.

- Lei, T. (no date) Askubuntu: AskUbuntu question dataset.

- Lester, B., Al-Rfou, R. and Constant, N. (2021) The power of scale for parameter-efficient prompt tuning, Aclanthology.org. Available at: https://aclanthology.org/2021.emnlp-main.243.pdf (Accessed: August 22, 2023).

- Liu, Y. et al. (2019) “RoBERTa: A robustly optimized BERT pre-training approach,” arXiv [cs.CL]. Available at: http://arxiv.org/abs/1907.11692 (Accessed: August 22, 2023).

- Ma, C. (2023) “Prompt engineering and calibration for zero-shot commonsense reasoning,” arXiv [cs.CL]. Available at: http://arxiv.org/abs/2304.06962 (Accessed: August 22, 2023).

- Monroe, B. L., Colaresi, M. P. and Quinn, K. M. (2008) “Fightin’ words: Lexical feature selection and evaluation for identifying the content of political conflict,” Political analysis: an annual publication of the Methodology Section of the American Political Science Association, 16(4), pp. 372–403. doi: 10.1093/pan/mpn018.

- Muthukrishnan, P., Gerrish, J. and Radev, D. R. (2008) “Detecting multiple facets of an event using graph-based unsupervised methods,” in Proceedings of the 22nd International Conference on Computational Linguistics (Coling 2008). Manchester, UK: Coling 2008 Organizing Committee, pp. 609–616.

- Niklaus, J. (no date) “legal_case_document_summarization.”

- nsi319/legal-led-base-16384 · Hugging Face (2019) Huggingface.co. Available at: https://huggingface.co/nsi319/legal-led-base-16384 (Accessed: August 22, 2023).

- nsi319/legal-pegasus · Hugging Face (2021) Huggingface.co. Available at: https://huggingface.co/nsi319/legal-pegasus (Accessed: August 22, 2023).

- OpenAI (2020) GPT-3 powers the next generation of apps, Openai.com. Available at: https://openai.com/blog/gpt-3-apps (Accessed: August 22, 2023).

- Poerner, N., Waltinger, U. and Schutze, H. (2020) Inexpensive domain adaptation of pre-trained language models: Case studies on bio-medical NER and covid-19 QA, Aclanthology.org. Available at: https://aclanthology.org/2020.findings-emnlp.134.pdf (Accessed: August 22, 2023).

- Rayson, P., Leech, G. N. and Hodges, M. (1997) “Social differentiation in the use of English vocabulary: Some analyses of the conversational component of the British National Corpus,” International journal of corpus linguistics, 2(1), pp. 133–152. doi: 10.1075/ijcl.2.1.07ray.

- Rietzler, A. et al. (2020) “Adapt or get left behind: Domain adaptation through BERT language model finetuning for Aspect-Target Sentiment Classification,” in Proceedings of the Twelfth Language Resources and Evaluation Conference. Marseille, France: European Language Resources Association, pp. 4933–4941.

- Shukla, A. et al. (2022) “Legal case document summarization: Extractive and abstractive methods and their evaluation.” Zenodo.

- Tai, W. et al. (2020) ExBERT: Extending pre-trained models with domain-specific vocabulary under constrained training resources, Aclanthology.org. Available at: https://aclanthology.org/2020.findings-emnlp.129.pdf (Accessed: August 22, 2023).

- Technology Innovation Institute (2023) Falcon LLM, Tii.ae. Available at: https://falconllm.tii.ae/ (Accessed: August 22, 2023).

- Touvron, H. et al. (2023) “LLaMA: Open and efficient foundation language models,” arXiv [cs.CL]. Available at: http://arxiv.org/abs/2302.13971 (Accessed: August 22, 2023).

- Won, H. et al. (2022) Scaling instruction-finetuned language models, Arxiv.org. Available at: http://arxiv.org/abs/2210.11416 (Accessed: August 22, 2023).

- Zhang, R. et al. (2020) Multi-stage pre-training for low-resource domain adaptation, Aclanthology.org. Available at: https://aclanthology.org/2020.emnlp-main.440.pdf (Accessed: August 22, 2023).

- Zheng, L. et al. (2021) “When does pre-training help?: Assessing self-supervised learning for law and the CaseHOLD dataset of 53,000+ legal holdings,” in Proceedings of the Eighteenth International Conference on Artificial Intelligence and Law. New York, NY, USA: ACM.

Subscribe

To keep yourself updated on the latest technology and industry trends subscribe to the Infosys Knowledge Institute's publications

Count me in!