Distributed messaging systems – from traditional queues to stream processing

Technology advances in Event Streaming platforms have the potential to supercharge messaging infrastructure at global scale. This whitepaper discusses the limitations of legacy messaging platforms, lays down a blueprint for Events First system architecture & discusses key technology considerations to embrace this new architecture paradigm.

Insights

- Traditional messaging systems such as MQ (Message Queue) are difficult to scale up due to inherent challenges related to latency & data consistency.

- Advances in Events First architecture based on Kafka & Event Streaming technologies equip organizations to scale up globally while ensuring data consistency, durability & real-time data availability.

- This whitepaper lays down a blueprint for an Events First software architecture.

- It further discusses the solution landscape & key considerations for an effective Events First software architecture.

Introduction

Within the ever-evolving financial services landscape, real-time, high-fidelity data exchange is a mission-critical factor for industry prosperity. Consequently, low-latency messaging platforms have become the cornerstone of financial institutions' communication architecture, facilitating the secure & efficient flow of sensitive information.

This white paper delves into the historical trajectory of messaging systems, tracing their development from legacy message queuing protocols towards real-time event streaming architecture.

Financial messaging systems consist of robust, low-latency message queuing & event streaming platforms. These platforms facilitate real-time dissemination of high-fidelity data across disparate applications within the financial ecosystem. This, in turn, enables instantaneous communication for seamless integration of various financial service components.

The financial messaging landscape has undergone a significant transformation, driven by the industry's escalating dependence on high-performance, low-latency communication middleware. Legacy messaging platforms have been superseded by new-age platforms such as Apache Kafka, Red Hat AMQ & cloud-based platforms like Azure Event Hubs, Amazon Kinesis & Google Cloud Pub/Sub. This transformation has opened new avenues for data-hungry use cases such as Machine Learning, real-time Big Data analytics, complex event routing as well as simple B2B (Business to Business) or B2C (Business to Consumer) integrations.

The financial messaging landscape was initially defined by the dominance of asynchronous messaging paradigms, such as point-to-point queuing & topic-based pub/sub architectures. These foundational message exchange protocols served as the communication backbone for their respective eras. This historical context provides a valuable foundation for understanding the current complexities & potential innovations surrounding messaging systems in financial services.

Challenges & Limitations of Traditional Messaging Systems in Financial Services

Legacy messaging protocols, once the bedrock of communication architectures within financial services, now grapple with a multitude of constraints that impede their efficacy in the age of high-frequency, data-centric operations.

Traditional messaging systems based on JMS (Java Message Service) often encounter limitations in message delivery latency, scalability, & data consistency.

This section delves into the limitations inherent to traditional messaging systems.

Latency:

Traditional messaging systems, inherently constrained by their store-&-forward processing paradigm, frequently struggle with message delivery latency, which impedes the high-velocity execution of financial transactions. Within the financial services landscape, where time-sensitive decision-making & expedited response intervals are paramount, latency emerges as a significant bottleneck.

Specific Challenges:

Order Execution Delays: The inherent store-&-forward paradigm employed by traditional message queuing systems can introduce message delivery delays, consequently impacting the time-to-completion of financial transactions.

Real-Time Market Data: In high-frequency trading environments where real-time dissemination of market data is paramount, even minor publish-subscribe message delivery latency can lead to information asymmetry, potentially delaying access to critical updates for traders & market participants.

A brokerage firm leveraging a legacy message queuing infrastructure consistently encountered execution delays for high-frequency trades due to message delivery latency. These latency issues resulted in missed market opportunities & suboptimal order fulfillment.

Scalability:

Traditional messaging systems exhibit limitations in their capacity to accommodate surging data volumes & dynamically fluctuating workloads. The monolithic architectures, once sufficient for the demands of their era, struggle to adapt to the ever-evolving, high-velocity financial ecosystem.

Specific Challenges:

Volume of Transactions: As financial services witness a surge in transaction volumes, traditional message queues may encounter difficulties scaling horizontally to accommodate the increased workload.

Dynamic Subscriber Base: Publish-subscribe systems may face scalability issues when the number of subscribers dynamically fluctuates, impacting the ability to efficiently distribute messages.

Organizations using point-to-point messaging architecture can encounter significant challenges in scaling their infrastructure to accommodate a growing customer base. This can result in system outages during peak transaction volumes.

Data Consistency:

Maintaining data consistency across distributed systems is a key requirement in the financial services sector. Traditional messaging systems, however, may face challenges in ensuring that all nodes within the system have consistent & up-to-date information. If a message is added to a queue but not consumed before it expires or the queue becomes full, the message could be lost. This can happen if the queue is not monitored or if there is a sudden surge in traffic that overwhelms the queue.
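The message-loss scenario described above can be illustrated with a minimal sketch. It assumes a toy in-memory queue with a fixed capacity & a per-message time-to-live; the class name `BoundedTtlQueue`, its parameters & the injected clock are hypothetical & do not correspond to any particular MQ product.

```python
import time
from collections import deque


class BoundedTtlQueue:
    """Toy queue that loses messages when it is full or when they expire."""

    def __init__(self, max_size, ttl_seconds, clock=time.monotonic):
        self.max_size = max_size
        self.ttl_seconds = ttl_seconds
        self.clock = clock          # injectable so expiry can be simulated
        self._items = deque()       # entries are (enqueue_time, message)
        self.dropped = []           # messages lost to overflow or expiry

    def put(self, message):
        # A full queue rejects the message; without monitoring it is simply lost.
        if len(self._items) >= self.max_size:
            self.dropped.append(message)
            return False
        self._items.append((self.clock(), message))
        return True

    def get(self):
        # Skip (and lose) any message that outlived its time-to-live.
        now = self.clock()
        while self._items:
            enqueued_at, message = self._items.popleft()
            if now - enqueued_at > self.ttl_seconds:
                self.dropped.append(message)
                continue
            return message
        return None


# Simulated traffic surge followed by a slow consumer.
now = [0.0]
queue = BoundedTtlQueue(max_size=2, ttl_seconds=5, clock=lambda: now[0])
accepted = [queue.put(m) for m in ("debit-1", "debit-2", "debit-3")]
now[0] = 10.0               # consumer arrives after the TTL has passed
first_message = queue.get()
```

In this run the third message is rejected because the queue is full, & the first two expire before the consumer reads them, so all three end up in `dropped`.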

Specific Challenges:

Transaction Integrity: Maintaining data integrity for financial transactions within traditional message queuing systems becomes increasingly intricate across numerous distributed nodes. This complexity can introduce discrepancies in the persisted state of the transactions.

Synchronization Issues: Publish-subscribe architectures may face difficulties in ensuring data synchronization across a heterogeneous subscriber landscape within the financial domain. This can lead to data inconsistencies, potentially resulting in misinterpretations of critical financial information.

Financial organizations using legacy point-to-point messaging architecture may encounter data integrity challenges during system updates. This can potentially lead to discrepancies in customer account balances.

Considering the limitations mentioned above, point-to-point messaging systems are feasible only for scenarios where message sender & receiver applications have a one-to-one relationship. Such one-to-one relationships are rare in today's globally distributed, always-online organizations.

Event-Driven Architecture: Supercharging Modern Financial Systems

Event-Driven Architecture (EDA) fosters communication between loosely coupled services by utilizing asynchronous event streams. These event streams represent state transitions within the system, essentially serving as notifications of state changes.

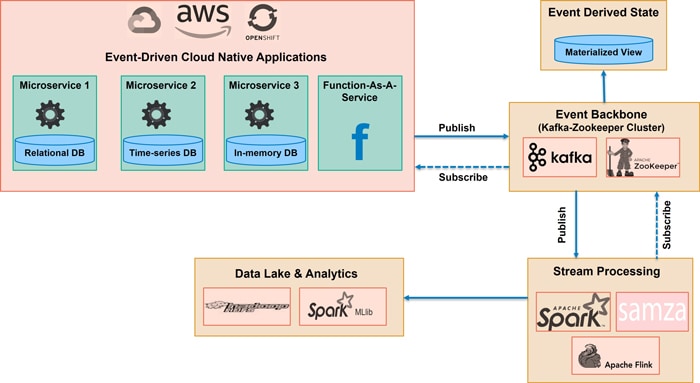

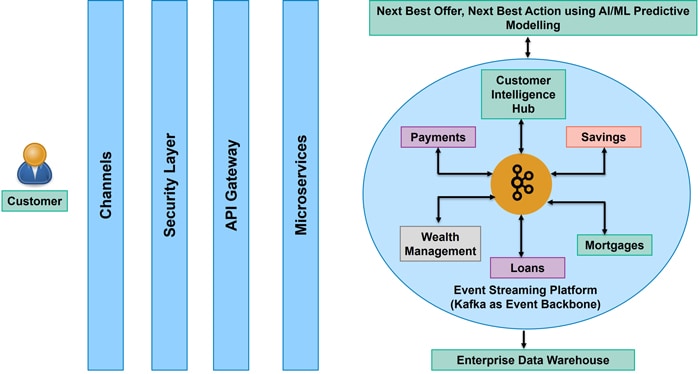

Figure 1 - Event Driven Microservices leveraging Data Streaming

In simpler terms, EDA decomposes a system into independent services that communicate via messages (events) that encapsulate state changes. These events trigger specific actions within subscribing services. This decoupled & asynchronous approach is a cornerstone of modern application development. Figure 1 depicts a typical event-driven architecture.
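As a rough illustration of services reacting to events that encapsulate state changes, the sketch below uses a hypothetical in-process `EventBus`. The event name `payment.settled` & both handlers are assumptions made for this example; a real deployment would use an event broker & deliver events asynchronously.

```python
from collections import defaultdict


class EventBus:
    """Minimal in-process publish/subscribe bus (synchronous, for illustration)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event_type, payload):
        # Every subscriber of this event type receives the same payload.
        for handler in list(self._subscribers[event_type]):
            handler(payload)


bus = EventBus()
ledger = []
notifications = []

# Two independent services subscribe to the same state change.
bus.subscribe("payment.settled", lambda e: ledger.append(e["amount"]))
bus.subscribe("payment.settled",
              lambda e: notifications.append(f"Payment {e['id']} settled"))

# The publisher does not know which services will react.
bus.publish("payment.settled", {"id": "p-1", "amount": 250})
```

The publisher only knows the event type, so new subscribers can be added without changing the publishing service.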

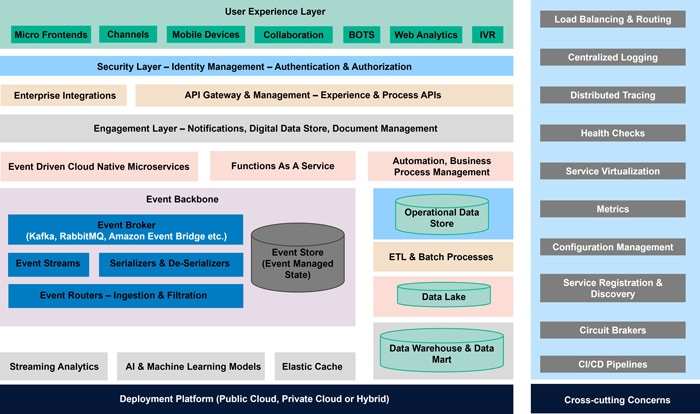

Within the realm of modern financial messaging architectures, Event-Driven Architecture (EDA) emerges as a pivotal component for facilitating real-time reactivity to critical financial events, transactions, & data updates. Figure 2 depicts an architecture blueprint for any software system based on EDA & event streams.

Figure 2 – Architecture Blueprint for Event Driven Architecture

Request/Response systems lack built-in message retry capabilities, whereas events can be retried using functionalities offered by event brokers. By leveraging the loose coupling between services facilitated by Event-Driven Architecture (EDA), the system exhibits enhanced agility & adaptability.

Furthermore, this decoupled integration fosters streamlined workflows by enabling versatile event routing, storage, & auditability through the event broker. Additionally, the inherent real-time & publish-subscribe paradigm of EDA obviates the requirement for continual polling mechanisms, thereby minimizing operational overhead & system intricacy.
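The broker-side retry behavior mentioned above might look like the following sketch. The function `deliver_with_retry`, the `FlakyHandler` consumer & the "dead-lettered" outcome are hypothetical names for this illustration; actual retry & dead-letter features differ between brokers.

```python
def deliver_with_retry(event, handler, max_attempts=3):
    """Try the handler up to max_attempts times; dead-letter on final failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            handler(event)
            return "delivered", attempt
        except Exception:
            # A real broker would typically wait (back off) before redelivery.
            continue
    return "dead-lettered", max_attempts


class FlakyHandler:
    """Hypothetical consumer that fails a fixed number of times, then succeeds."""

    def __init__(self, failures):
        self.failures = failures
        self.processed = []

    def __call__(self, event):
        if self.failures > 0:
            self.failures -= 1
            raise RuntimeError("transient failure")
        self.processed.append(event)


handler = FlakyHandler(failures=2)
outcome = deliver_with_retry({"id": 1}, handler)
```

Because a handler may see the same event more than once under this scheme, consumers generally need to tolerate redelivery.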

In event-driven systems, there are two main patterns by which services can work together.

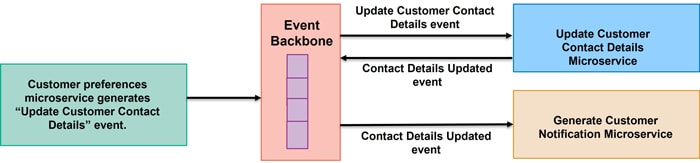

In a choreography pattern, services collaborate without a central coordinator. Each service subscribes to relevant events & triggers pre-programmed actions based on its designated role. This decentralized message exchange fosters loose coupling & scalability within the architecture.

Figure 3 – Choreography Topology (also called Broker Topology)

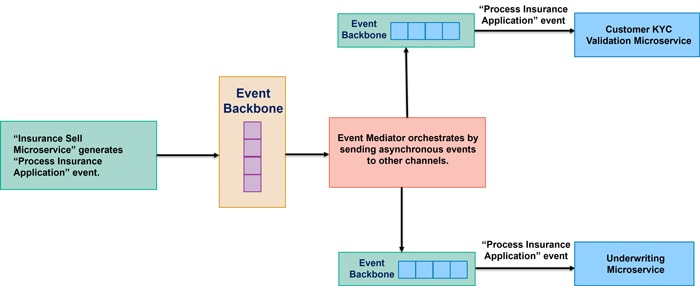

Orchestration, in contrast, introduces a central coordinator responsible for managing the sequence of event-driven actions. Functioning as the event message backbone, it guarantees a predetermined order for service invocations & data propagation, making it ideal for intricate workflows where independent services require a central entity to orchestrate a unified response.

Figure 4 – Orchestration Topology (also called Mediator Topology)
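The difference between the two topologies can be sketched in a few lines. The order-processing steps, event names & helper functions below are assumptions made for illustration, & the in-process calls stand in for broker-mediated messaging.

```python
from collections import defaultdict

# --- Choreography: each service reacts to an event and emits the next one ---
subscribers = defaultdict(list)
trace = []  # order in which events were published


def publish(event_type, payload):
    trace.append(event_type)
    for handler in subscribers[event_type]:
        handler(payload)


def reserve_funds(order):
    publish("funds.reserved", order)


def execute_trade(order):
    publish("trade.executed", order)


subscribers["order.placed"].append(reserve_funds)
subscribers["funds.reserved"].append(execute_trade)
publish("order.placed", {"order_id": 1})


# --- Orchestration: a central coordinator invokes services in a fixed order ---
def orchestrate(order, steps):
    completed = []
    for name, step in steps:
        step(order)
        completed.append(name)
    return completed


steps = [
    ("reserve_funds", lambda o: o.update(reserved=True)),
    ("execute_trade", lambda o: o.update(executed=True)),
]
order = {"order_id": 2}
completed = orchestrate(order, steps)
```

In the choreography half the sequence emerges from the subscriptions, whereas in the orchestration half the sequence is stated explicitly in `steps` & owned by the coordinator.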

Event-driven architectures (EDA) offer significant advantages like scalability, high availability, & robustness. However, they also introduce challenges such as a more complex codebase, the need for specialized monitoring tools (e.g., Datadog, Prometheus), & additional infrastructure requirements for streaming platforms (e.g., Apache Kafka, Apache Spark Streaming, Apache Flink).

Evolution to Real-Time Event Streams: Events First Financial Systems

Traditional financial data processing often relied on the transfer of voluminous flat files. These files, typically generated at the end of each business day, necessitated a subsequent processing stage. Consequently, decision-making based on prior day's data invariably incurred a one-day latency.

Organizational sustainability hinges on anticipating customer needs by delivering personalized content & proactively offering future-oriented services. Financial institutions (FIs) require even more robust & sophisticated systems due to the additional burden of regulatory compliance. This complexity further amplifies in the context of global operations.

To address this requirement, organizations leverage data integration techniques to aggregate information from diverse heterogeneous sources. This data undergoes real-time processing or storage, enabling the utilization of insights derived from it for more effective decision-making.

Real-time event stream processing (ESP) architectures empower financial institutions (FIs) to address a variety of critical use cases. These capabilities enable the continuous ingestion & analysis of data streams, allowing FIs to react to events & derive insights in real-time.
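A common building block of such continuous analysis is windowed aggregation. The sketch below assumes events carrying a timestamp in seconds (`ts`), an `account` & an `amount`, & groups them into fixed-size (tumbling) windows. The field names & the function `tumbling_window_totals` are hypothetical, & the function processes a finite list only for demonstration, whereas a stream processor would update the totals incrementally as events arrive.

```python
from collections import defaultdict


def tumbling_window_totals(events, window_seconds):
    """Sum amounts per (window_start, account) over timestamped events."""
    totals = defaultdict(float)
    for event in events:
        # Integer division assigns each event to exactly one window.
        window_start = (event["ts"] // window_seconds) * window_seconds
        totals[(window_start, event["account"])] += event["amount"]
    return dict(totals)


events = [
    {"ts": 0, "account": "A", "amount": 100.0},
    {"ts": 30, "account": "A", "amount": 50.0},
    {"ts": 61, "account": "A", "amount": 25.0},
    {"ts": 62, "account": "B", "amount": 10.0},
]
totals = tumbling_window_totals(events, window_seconds=60)
```

Here the first two events fall into the window starting at 0 & the last two into the window starting at 60.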

Machine learning (ML) & artificial intelligence (AI) are powerful tools that can be applied to analyze & extract insights from streaming data.

Example scenarios for financial services organizations leveraging event streaming

Organizations stay competitive by anticipating customer needs & delivering personalized content at the most appropriate customer interaction touchpoints. This requires real-time insights into customer preferences & intelligent analytics to deliver an outcome-focused customer experience. An event-driven platform, as shown in Figure 5, can gather multiple facets of customer engagement at runtime, enrich them with segment- & product-specific information, & feed them into a predictive analytics model to drive customer engagement.

Figure 5 – Digital Engagement Hub with Customer 360° view

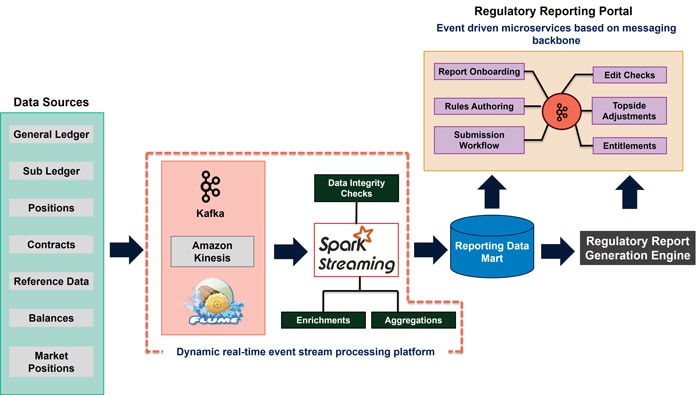

With financial oversight increasing worldwide, financial institutions must keep their systems of record up to date to meet regulatory submission timelines & stringent data integrity checks across multiple regulatory reports. Apart from a robust data foundation layer, financial institutions need the agility to onboard new regulatory reports. Onboarding regulatory reports requires coordination across groups that are often disconnected: the group that onboards regulatory reports is often different from the group that creates the rules to generate financial figures for those reports, & report generation & submission are handled by yet other groups. This restricts the ability to respond efficiently to changes in reporting standards.

An event streaming-based architecture acts as a central nervous system that connects heterogeneous data sources, ensures highly integrated reporting data, & automates the entire regulatory report generation & submission process, as illustrated in Figure 6.

Figure 6 – Regulatory Reporting Platform leveraging Event Stream Processing

Solution Landscape for Event Stream Processing

Driven by the imperative to optimize operations, mitigate fraud, & deliver exceptional customer experiences, financial institutions (FIs) are relentlessly pursuing real-time data-driven insights. However, traditional messaging systems, often architected for lower data volumes & velocities, frequently falter under the deluge of high-frequency data streams characterizing the contemporary financial landscape.

Stream processing, a paradigm shift in financial data messaging, enables real-time analytics & expedites decision-making. This architectural approach offers deployment flexibility, catering to diverse operational environments & institution-specific requirements.

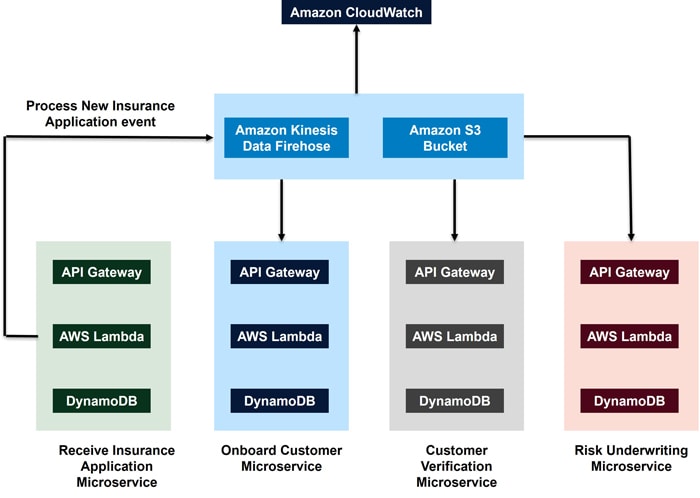

Here is a simplified example of an insurance application processing system that leverages a serverless streaming data service, Amazon Kinesis. As depicted in Figure 7, there are four microservices consisting of Amazon API Gateway, AWS Lambda & Amazon DynamoDB. The “Receive Insurance Application Microservice” undergoes a state change when it receives a new application for insurance & publishes an event to Amazon Kinesis Data Streams. A Kinesis Data Streams application running in each of the four AWS Lambda functions handles message filtering, processing & forwarding. An Amazon S3 bucket acts as the persistence store for all events. An Amazon CloudWatch dashboard can monitor aspects such as data buffering or ingestion, based on the metrics set for Kinesis Data Firehose.

Figure 7 – Event Driven Microservices with Streaming Data on Amazon AWS

Apache Flink is an open-source stream processing framework that can scale using both on-premises & cloud-hosted infrastructure. Cloud options, such as the managed services Amazon Kinesis or Azure Stream Analytics, provide ease of use & seamless integration within their respective ecosystems. Hybrid deployments can combine on-premises Kafka with cloud-based processing, allowing data residency compliance while leveraging real-time cloud analytics.

Finally, multi-cloud stream processing platforms like Apache Pulsar offer the ability to seamlessly route data flows across multiple cloud providers. This flexibility caters to complex financial institutions with heterogeneous infrastructure needs.

Regardless of the chosen deployment environment, stream processing empowers financial institutions to unlock a new era of operational agility & efficiency.

Key Considerations for Well-Architected Events First Systems

The migration from traditional message queues to stream processing in financial messaging systems represents a significant transformation. To ensure a smooth transition, overcome challenges, & optimize performance, careful consideration should be given to established best practices & practical recommendations.

The key considerations for well-architected event-driven financial systems, incorporating insights from financial regulations & best practices, are outlined below.

Securing Data at Rest/Motion:

Encrypt sensitive financial data at rest using robust algorithms like AES-256. This safeguards data even if storage systems are compromised. Industry standards like PCI DSS for encryption should be considered. Strict access controls (IAM – Identity & Access Management) should be implemented to restrict access to financial data based on the principle of least privilege. Regularly review & audit access permissions. Employ techniques like digital signatures or message digests to ensure data integrity & detect tampering attempts.
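The integrity check mentioned above can be sketched with Python's standard `hmac` & `hashlib` modules. This is a keyed message digest (HMAC-SHA256) rather than an asymmetric digital signature, & the helper names `sign_event` & `verify_event`, the sample key & the event fields are assumptions for illustration. In practice the key would come from a managed secret store.

```python
import hashlib
import hmac
import json


def sign_event(event, secret_key):
    """Return a hex HMAC-SHA256 digest over a canonical JSON encoding."""
    # Sorted keys and fixed separators make the encoding deterministic.
    body = json.dumps(event, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(secret_key, body, hashlib.sha256).hexdigest()


def verify_event(event, signature, secret_key):
    """Recompute the digest and compare in constant time."""
    return hmac.compare_digest(sign_event(event, secret_key), signature)


key = b"demo-key-not-for-production"   # placeholder value for the example
event = {"id": "tx-1", "account": "A", "amount": 100}
signature = sign_event(event, key)

tampered = dict(event, amount=1000)
is_original_valid = verify_event(event, signature, key)
is_tampered_valid = verify_event(tampered, signature, key)
```

Any change to the payload after signing produces a different digest, so the tampered copy fails verification.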

For data in motion, secure protocols such as TLS (the successor to the now-deprecated SSL) should be used for encrypted communication between event producers, brokers, & consumers. This protects data from eavesdropping & man-in-the-middle attacks. Masking or tokenizing sensitive data in transit helps minimize exposure in case of breaches. Regulatory requirements regarding data masking should also be observed.
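Masking & tokenization of a sensitive field before it is placed on an event stream might look like the sketch below. The helpers `mask_pan` & `tokenize` are hypothetical. The keyed hash used here is only a stand-in for tokenization, since production tokenization commonly relies on a dedicated token vault, & the card number shown is a widely used test value.

```python
import hashlib
import hmac


def mask_pan(pan, visible=4):
    """Replace all but the last `visible` digits of a card number with '*'."""
    digits = pan.replace(" ", "")
    return "*" * (len(digits) - visible) + digits[-visible:]


def tokenize(value, secret_key):
    """Derive a stable surrogate token from a value using a keyed hash."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


key = b"demo-key-not-for-production"   # placeholder value for the example
pan = "4111 1111 1111 1111"            # well-known test card number

event = {
    "card_masked": mask_pan(pan),      # safe to show to operators
    "card_token": tokenize(pan, key),  # stable join key without the raw number
    "amount": 42,
}
```

The same input always yields the same token, so downstream consumers can still correlate events for one card without ever seeing the raw number.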

Schema Governance (Schema Registry/Avro):

Implement a schema registry to store, manage, & enforce event schemas across producers & consumers. This ensures data consistency & reduces errors. Maintain version history for schemas to support backward compatibility & handle schema evolution gracefully. Validate event payloads against registered schemas to prevent invalid data from entering the system, improving data quality & reducing downstream issues.

Leverage Avro's schema definition language for defining the structure & expected data types of events. This promotes data clarity & interoperability. Avro's flexible schema evolution allows for changes without breaking consumers that rely on older schemas. This facilitates easier system updates & maintenance.
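The registry responsibilities described above (versioning, compatibility checks & payload validation) can be sketched with a toy in-memory class. `SchemaRegistry` below is hypothetical & is not the API of any real schema registry or of the Avro library. Schemas are plain dictionaries of field name to type & optional default, & the compatibility rule is a simplified version of backward compatibility in which newly added fields must carry a default.

```python
class SchemaRegistry:
    """Toy registry: versioned schemas, compatibility check, payload validation."""

    def __init__(self):
        self._versions = {}  # subject -> list of schemas (version = index + 1)

    def register(self, subject, schema):
        versions = self._versions.setdefault(subject, [])
        if versions and not self._backward_compatible(versions[-1], schema):
            raise ValueError(f"schema for {subject!r} is not backward compatible")
        versions.append(schema)
        return len(versions)

    @staticmethod
    def _backward_compatible(old, new):
        # The new schema can read old data if existing fields keep their type
        # and every added field supplies a default.
        for name, spec in new.items():
            if name in old:
                if old[name]["type"] is not spec["type"]:
                    return False
            elif "default" not in spec:
                return False
        return True

    def validate(self, subject, version, event):
        schema = self._versions[subject][version - 1]
        for name, spec in schema.items():
            if name in event:
                if not isinstance(event[name], spec["type"]):
                    return False
            elif "default" not in spec:
                return False
        return True


registry = SchemaRegistry()
v1 = registry.register("payment", {"id": {"type": str}, "amount": {"type": float}})
v2 = registry.register("payment", {
    "id": {"type": str},
    "amount": {"type": float},
    "currency": {"type": str, "default": "USD"},  # added with a default
})
```

An event written against version 1 still validates against version 2 because the added `currency` field has a default, while registering a new required field without a default is rejected.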

Exactly Once Event Delivery Semantics (Transaction Management):

Ensure events are delivered exactly once to their intended consumers. This is crucial to maintain data consistency & prevent duplicate or missing transactions in financial systems. Consider using 2PC or XA transactions, where appropriate, to ensure event delivery & state changes across multiple systems are atomic (all-or-nothing) in a distributed environment.

Design your event processing logic to be idempotent, meaning it can be safely replayed without causing unintended side effects. This mitigates potential issues related to retries or duplicate deliveries. Implement compensating transactions to undo any changes made in case of delivery failures, ensuring data integrity & financial correctness.
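Idempotent processing is often implemented by remembering which event identifiers have already been applied. The sketch below assumes each event carries a unique `event_id`; the class `IdempotentLedger` & the event fields are hypothetical. It keeps its state in memory, whereas a production system would need to persist the processed identifiers & the balance change in the same atomic transaction.

```python
class IdempotentLedger:
    """Applies each event at most once, keyed by its event_id."""

    def __init__(self):
        self.balances = {}
        self._seen = set()   # identifiers of events already applied

    def apply(self, event):
        # A redelivered event is recognized and ignored.
        if event["event_id"] in self._seen:
            return False
        account = event["account"]
        self.balances[account] = self.balances.get(account, 0) + event["amount"]
        self._seen.add(event["event_id"])
        return True


ledger = IdempotentLedger()
deposit = {"event_id": "e-1", "account": "A", "amount": 100}

first = ledger.apply(deposit)    # applied
second = ledger.apply(deposit)   # duplicate delivery, ignored
```

With this guard in place, at-least-once delivery from the broker does not double-count the deposit.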

Log Compaction:

Log compaction, as implemented in Apache Kafka, retains at least the latest record for each key & discards older records with the same key, which optimizes storage space while preserving the current state. It is distinct from archiving or deleting historical data through retention policies. Financial regulations may mandate data retention for specific periods, so compacted topics should not be the only copy of records subject to those rules. Ensure compliance by archiving logs according to regulatory retention timeframes. Maintain auditability by archiving logs in a tamper-proof manner & defining clear access controls for archived data. If archived logs will be used for future analysis, consider archiving them in a format that is readily accessible for historical queries.
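In Apache Kafka, log compaction refers specifically to keeping at least the most recent record for each key. A minimal sketch of that idea, assuming the log is a list of `(key, value)` records in offset order, is shown below; the function `compact` is hypothetical & ignores details such as delete markers (tombstones) & segment boundaries.

```python
def compact(log):
    """Keep only the latest record per key, preserving offset order."""
    latest_offset = {}
    for offset, (key, _value) in enumerate(log):
        latest_offset[key] = offset   # later offsets overwrite earlier ones
    return [
        record
        for offset, record in enumerate(log)
        if latest_offset[record[0]] == offset
    ]


log = [
    ("acct-1", 100),
    ("acct-2", 50),
    ("acct-1", 120),   # supersedes the first acct-1 record
]
compacted = compact(log)
```

After compaction the log still answers "what is the current value for each key", but the superseded history is gone, which is why records needed for audit are archived separately.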

Monitoring/Health Check & Logging:

Monitor event producers, brokers, consumers, & infrastructure for health, errors, throughput, & latency. Implement metrics & dashboards to track system performance & identify potential issues. Set up alerts for critical events that indicate potential problems, allowing for timely intervention & troubleshooting. Aggregate logs from all components of your event-driven system for centralized analysis. Leverage tools to identify patterns, diagnose failures, & gain insights into system behavior.
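A latency metric with an alert threshold, as described above, can be sketched as follows. The class `LatencyMonitor`, its nearest-rank percentile calculation & the threshold value are assumptions for illustration; dedicated monitoring tools compute such metrics over sliding time windows rather than over all samples.

```python
class LatencyMonitor:
    """Collects latency samples and flags when the 99th percentile is too high."""

    def __init__(self, p99_threshold_ms):
        self.p99_threshold_ms = p99_threshold_ms
        self.samples = []

    def record(self, latency_ms):
        self.samples.append(latency_ms)

    def percentile(self, pct):
        """Nearest-rank percentile; pct is an integer from 1 to 100."""
        ordered = sorted(self.samples)
        # Integer ceiling of pct/100 * n avoids floating-point rounding issues.
        rank = max(1, (pct * len(ordered) + 99) // 100)
        return ordered[rank - 1]

    def should_alert(self):
        return bool(self.samples) and self.percentile(99) > self.p99_threshold_ms


monitor = LatencyMonitor(p99_threshold_ms=35)
for latency in (10, 20, 30, 40):
    monitor.record(latency)

median = monitor.percentile(50)
alert = monitor.should_alert()
```

Alerting on a high percentile rather than the average surfaces the slow tail of deliveries that an average would hide.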

Adhere to relevant financial regulations (e.g., PCI DSS, SOX, Dodd-Frank) when designing & implementing your event-driven architecture. This ensures data security, robust audit trails, & compliance with data retention requirements.

Conclusion: Fast-Forwarding the Financial World with Event Streams

The future of distributed messaging systems in financial services hinges on technological innovation, adaptability, & unwavering security. By embracing modern stream processing technologies that cater to these core principles, financial institutions can effectively navigate the ever-evolving landscape of real-time data processing, scalability demands, & ever-present security threats.

The open-source software landscape offers a powerful toolkit for building highly available, robust, & fault-tolerant applications in the financial sector. Two key technologies stand out: Apache Kafka & Apache Flink.

While open-source solutions like Kafka & Flink offer immense value, the next generation of financial applications demands advanced manageability, telemetry, & even greater scalability & fault tolerance. This is where fully managed, cloud-based event streaming platforms such as Azure Event Hubs, Amazon Kinesis or Google Cloud Pub/Sub assume a key role. These cloud-native event streaming services make real-time stream analytics for actionable insights possible with a scalable, flexible & cost-efficient approach.
