Cloud

The CNCF Landscape

This document details key projects from the Cloud Native Computing Foundation (CNCF) landscape (https://landscape.cncf.io/) under the runtime and Wasm categories. We considered only incubating or graduated projects for client programs and list sandbox projects separately, as they may not be ready for production. In the text, (G), (I), and (S) mark graduated, incubating, and sandbox projects, respectively.

Insights

- All statements are current as of April 2023, but things may change as these projects are fast moving.

- The CNCF landscape lists open-source projects maintained by CNCF and partner companies.

- Open-source projects hosted directly by CNCF are licensed under Apache License 2.0 and cover various areas of the cloud native landscape.

The CNCF project categories (Table 1) move up the stack from runtime to observability and analysis, with intermediate layers building upon each other to form a complete cloud native development and production environment.

Table 1: CNCF project categories

| Category | Description |
|---|---|
| Runtime | Allows containers to run on nodes, network with each other, use storage space from the node, etc. |
| Wasm | Contains WasmEdge, a Wasm runtime already adopted by Docker Desktop |
| Orchestration and management | Centers on Kubernetes, a heavyweight project, and associated platform tooling, such as service meshes, used to set up the platform |
| Provisioning | Allows administrators to set up and manage all the components, beyond Kubernetes itself, required to create a complete platform |
| App definition and development | Covers projects that developers use to build applications and deploy them, with their associated services, on a cloud native platform |
| Platform | Alternative Kubernetes distributions; currently contains k3s, used by Rancher Desktop as a lightweight alternative to the full Kubernetes distribution |
| Serverless tooling | Allows workloads to scale down to zero when there is no load, and includes opinionated frameworks such as Dapr and Knative that accelerate app development |
| Observability and analysis | Supports platform operations with logging, monitoring, tracing, profiling, and dashboard creation |

Source: Infosys

The CNCF landscape is a valuable reference for end users to add capabilities to their platforms.

The following use cases address runtime and Wasm projects, comprising both CNCF and non-CNCF projects.

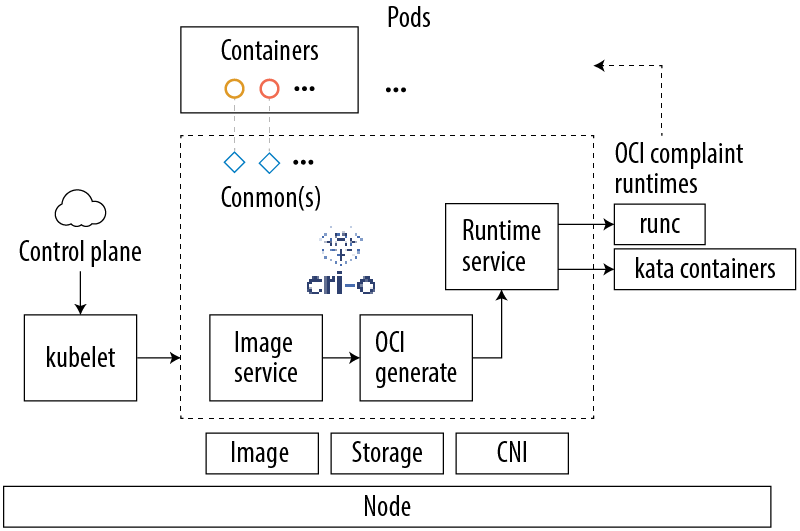

- Kubernetes v1.24 removed dockershim, so nodes run CRI-compatible runtimes, with cri-o and containerd as the standard choices. Both are OCI compliant, so any container image built to OCI specifications will continue to run on the platform.

- Docker produces OCI-compliant images.

- Teams working with Red Hat platforms such as OpenShift may adopt Podman.

- Teams can use other tooling such as buildpacks to build and run OCI-compliant images.

- Cloud and product vendors typically integrate storage and network-level operators as a part of their offerings.

- Platform teams can install and run storage operators such as Rook and Longhorn from open-source releases. However, this is more relevant for advanced end users who want full control of their applications.

- For most end users, we recommend the storage options provided by the platform, whether in the cloud or on-premises.

- Several promising sandbox-stage projects could significantly impact cloud native platforms, such as Submariner for multi-cluster networking and the WasmEdge runtime for Wasm containers.

This document also looks at the WasmEdge (sandbox) project that is part of the Wasm category and offers a Wasm container runtime. This project is already adopted by the Docker+Wasm Technical Preview product from Docker.

The cloud native glossary, an open-source repository where anyone can contribute, describes these concepts in detail.

About CNCF landscape runtime

The runtime category covers the foundational technologies on which Kubernetes and other higher-level abstractions are built. It is divided into container runtime, cloud native network, and cloud native storage.

Given the foundational nature of these technologies, a technology stack will include both CNCF and non-CNCF projects. For example, a typical stack may use Docker (non-CNCF) and Kubernetes (CNCF), with containerd (CNCF) or cri-o (CNCF) as the container runtime. In addition, both networking and storage have multiple proprietary and non-proprietary implementations, so the runtime layer of any cloud native program will, for now, comprise a mix of CNCF and non-CNCF projects.

Different implementations of these Kubernetes building blocks can work well together in a technology stack if they comply with common standards, such as the Container Network Interface (CNI, CNCF), the Open Container Initiative (OCI, non-CNCF), and the Container Storage Interface (CSI, non-CNCF).

Container runtime

Docker (non-CNCF) is the most popular tool for building container images, both locally and in pipelines. Teams using Red Hat tooling often adopt Podman (open source and sponsored by Red Hat) as a drop-in replacement for Docker. Because most image builds run in a pipeline, teams can also use a builder image such as Kaniko to avoid running a container with root permissions (required for Docker).

All the container tools above adhere to the OCI specifications, so teams can use one tool, such as Docker, to build container images and then run them on Kubernetes with cri-o as the container runtime.
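To make the OCI point concrete, the Python sketch below assembles a minimal OCI image manifest: one descriptor for the image config and one per layer, each identified by media type, SHA-256 digest, and size. It is a simplified illustration only; the config and layer bytes are placeholders rather than a complete image config and a real gzipped layer tarball, and build tools such as Docker, Podman, or Kaniko generate these documents for you.

```python
import hashlib
import json


def descriptor(media_type: str, blob: bytes) -> dict:
    """Build an OCI content descriptor: media type, sha256 digest, and size in bytes."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


def image_manifest(config_blob: bytes, layer_blobs: list[bytes]) -> dict:
    """Assemble a minimal OCI image manifest (schemaVersion 2)."""
    return {
        "schemaVersion": 2,
        "mediaType": "application/vnd.oci.image.manifest.v1+json",
        "config": descriptor("application/vnd.oci.image.config.v1+json", config_blob),
        # Layers are listed in order, from the base layer upward.
        "layers": [
            descriptor("application/vnd.oci.image.layer.v1.tar+gzip", blob)
            for blob in layer_blobs
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes: a real config also carries rootfs diff_ids, and real layers are tarballs.
    config = json.dumps({"architecture": "amd64", "os": "linux"}).encode()
    print(json.dumps(image_manifest(config, [b"placeholder layer bytes"]), indent=2))
```

Because runtimes that follow the OCI image specification resolve content by digest in the same way, an image described this way can be pulled by containerd or cri-o regardless of which tool built it.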

Table 2: Container tooling

| Technology | Server | Clients | Comments |
|---|---|---|---|
| Docker | Docker daemon | Docker CLI | It started the container revolution and has a client-server design with image build, run, caching, and additional features. |
| Podman | None (daemonless) | Podman | Designed to be a drop-in replacement for Docker that does not require root permissions. Highly compatible (but not 100%) with the Docker command line. |
| containerd / cri-o | containerd / cri-o | nerdctl, crictl, ctr | As of Kubernetes v1.24, cri-o and containerd are the default lightweight container runtimes for Kubernetes clusters. cri-o is built specifically for Kubernetes, and both provide several features for running containers in Kubernetes. The clients listed can be used to interface with containerd; crictl also works with cri-o. |

Both containerd (G) and cri-o (I) run workloads on Kubernetes nodes. They are lightweight, optimized for Kubernetes clusters, conformant to the OCI specifications, and the defaults in Kubernetes starting with v1.24.

Figure 1: Cri-o architecture

containerd has several clients for different purposes (listed in the container tooling table above). nerdctl is the most user-friendly for general use, while crictl and ctr are intended for debugging. Client libraries are also available for scripting.
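As a sketch of how a debugging client is pointed at a runtime, the Python helpers below render a `crictl.yaml` and build a crictl command line that targets a CRI socket explicitly through crictl's `--runtime-endpoint` flag. The socket paths are common defaults for containerd and cri-o and are assumptions to check against your node's configuration; the helper names are hypothetical.

```python
# Common default CRI socket paths (assumption: distributions and installers may change them).
CRI_ENDPOINTS = {
    "containerd": "unix:///run/containerd/containerd.sock",
    "cri-o": "unix:///var/run/crio/crio.sock",
}


def crictl_config(runtime: str, timeout_seconds: int = 10) -> str:
    """Render crictl.yaml content that points crictl at the given runtime's CRI socket."""
    endpoint = CRI_ENDPOINTS[runtime]
    return (
        f"runtime-endpoint: {endpoint}\n"
        f"image-endpoint: {endpoint}\n"
        f"timeout: {timeout_seconds}\n"
        "debug: false\n"
    )


def crictl_command(runtime: str, *args: str) -> list[str]:
    """Build a crictl invocation that selects the runtime explicitly instead of via crictl.yaml."""
    return ["crictl", "--runtime-endpoint", CRI_ENDPOINTS[runtime], *args]


if __name__ == "__main__":
    # Typically written to /etc/crictl.yaml on the node.
    print(crictl_config("containerd"))
    print(" ".join(crictl_command("cri-o", "ps")))
```

On a node, passing `crictl_command("containerd", "ps")` to `subprocess.run` would list running containers. ctr and nerdctl, by contrast, talk to containerd's own API rather than CRI, and containers managed by Kubernetes live in containerd's `k8s.io` namespace (for example, `nerdctl --namespace k8s.io ps`).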

Cloud native network

A microservice architecture requires a reliable and fully functional networking layer. This section of the CNCF landscape has projects catering to standards such as Container Network Interface (CNI) (I) and implementations such as Cilium (I).

The CNI project defines a standard to configure networking in containers. Kubernetes CNI plugins conform to this standard, but vendors may provide additional capabilities.

When setting up Kubernetes clusters in the cloud, use the well-tested and supported CNI plugin provided by the cloud vendor. For on-premises Kubernetes, use the default plugin supported by the vendor of your Kubernetes distribution (e.g., Red Hat for OpenShift, SUSE for Rancher, VMware for Tanzu Kubernetes Grid).
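To illustrate what the CNI standard specifies, the Python sketch below builds a CNI network configuration list that chains the reference `bridge` and `portmap` plugins, with `host-local` IP address management. The network name, bridge name, and subnet are arbitrary example values; vendor-supported plugins such as those recommended above install their own configuration.

```python
import json


def bridge_conflist(name: str, subnet: str, bridge: str = "cni0") -> dict:
    """Build a CNI network configuration list chaining the bridge and portmap plugins."""
    return {
        "cniVersion": "1.0.0",
        "name": name,
        "plugins": [
            {
                # bridge attaches each container's veth interface to a Linux bridge on the host.
                "type": "bridge",
                "bridge": bridge,
                "isGateway": True,
                "ipMasq": True,
                # host-local IPAM allocates container addresses from a per-node range.
                "ipam": {
                    "type": "host-local",
                    "ranges": [[{"subnet": subnet}]],
                    "routes": [{"dst": "0.0.0.0/0"}],
                },
            },
            {
                # portmap implements host port mappings requested by the runtime.
                "type": "portmap",
                "capabilities": {"portMappings": True},
            },
        ],
    }


if __name__ == "__main__":
    # containerd and cri-o read *.conflist files from /etc/cni/net.d/ by default.
    print(json.dumps(bridge_conflist("demo-net", "10.22.0.0/16"), indent=2))
```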

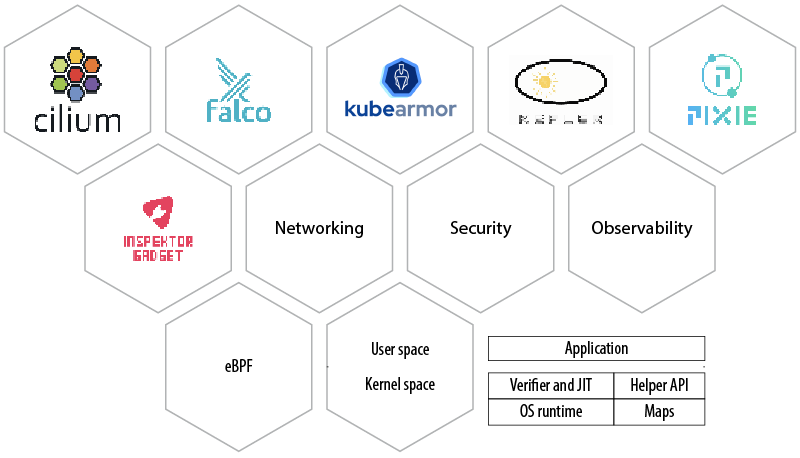

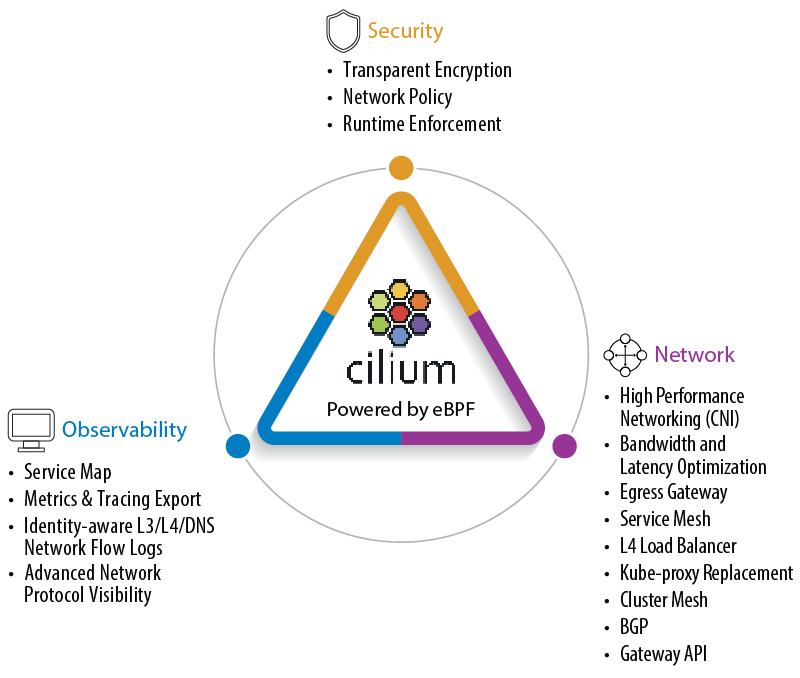

Cilium (I) is a CNI implementation built on eBPF, a Linux kernel technology that allows sandboxed programs to run in the kernel with safety checks enforced. eBPF is used for observability, networking, security, and other kernel capabilities. Previously, extending the kernel required kernel modules, which need root privileges and have limitations.

Figure 2: eBPF use cases

Cilium is a large CNCF project that uses eBPF to provide a wide range of networking and security features for Kubernetes.

It offers a CNI plugin, network policies, observability, a service mesh, security features, and a separate UI dashboard called Hubble. It was the first service mesh to run without sidecars, which simplifies upgrades and reduces resource consumption, among other benefits. Istio (I) is now developing a sidecar-less model called Ambient Mesh.

Figure 3: Cilium capabilities
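To illustrate Cilium's network policies, the Python sketch below builds a `CiliumNetworkPolicy` (API group `cilium.io/v2`) that admits only HTTP GET requests on a given path from pods with a given label, combining an L3/L4 rule with an L7 HTTP rule. The labels, port, and path are hypothetical example values; the JSON output can be applied with `kubectl apply -f`.

```python
import json


def allow_http_get(app: str, client: str, port: int, path: str) -> dict:
    """Build a CiliumNetworkPolicy allowing only HTTP GET on `path` from `client` pods to `app` pods."""
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumNetworkPolicy",
        "metadata": {"name": f"{app}-allow-{client}"},
        "spec": {
            # The policy applies to pods labelled app=<app>.
            "endpointSelector": {"matchLabels": {"app": app}},
            "ingress": [
                {
                    # Only traffic from pods labelled app=<client> is admitted.
                    "fromEndpoints": [{"matchLabels": {"app": client}}],
                    "toPorts": [
                        {
                            # Cilium expects the port as a string.
                            "ports": [{"port": str(port), "protocol": "TCP"}],
                            # L7 rule enforced by Cilium's proxy; `path` is a regular expression.
                            "rules": {"http": [{"method": "GET", "path": path}]},
                        }
                    ],
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(allow_http_get("api", "frontend", 8080, "/public/.*"), indent=2))
```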

Cloud native storage

Kubernetes was originally designed for stateless workloads and for non-critical stateful applications, such as caches, that require less reliability. This is because Kubernetes concepts, such as ephemeral pods and unplanned rescheduling, are not ideal for traditional databases. Kubernetes also lacked the ability to access and tune storage at the OS level, which is necessary for tuning traditional databases such as PostgreSQL.

Cloud native database offerings, such as CockroachDB, have re-architected their products to deal with these limitations. Traditional database projects, such as PostgreSQL and MySQL, are also catching up by offering operators for horizontal scaling and the ability to run in Kubernetes clusters. This means that lower-level storage must also scale and be reliable enough to support these stateful workloads.

CNCF projects Rook (G), Longhorn (I) and CubeFS (I) offer storage that works closely with Kubernetes and typically utilizes node storage for fast, reliable, redundant storage allocation, reclamation, and maintenance.

| CNCF project | File storage | Block storage | Object storage | Additional features |
|---|---|---|---|---|
| Rook | Yes | Yes | Yes | Runs a Kubernetes operator for Ceph, which provides the underlying storage |
| Longhorn | No | Yes | No | Offers advanced features such as cross-cluster replication |
| CubeFS | Yes | No | Yes | Offers both S3-compatible and HDFS APIs |

For any cluster running stateful workloads, selecting a cloud native storage provider is critical. Network File System (NFS) is not recommended for production-level clusters, even if companies have good infrastructure, including backups and network optimization. Our first recommendation is to use the storage options integrated with the cloud or on-premises vendor's platform, as they are well supported and thoroughly tested with it.

When choosing an open-source storage provider for Kubernetes, pick one of the options above for better control and tuning.
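Once a provider is chosen, workloads consume it through a StorageClass and PersistentVolumeClaims (PVCs). The Python sketch below builds both objects for Longhorn. The `driver.longhorn.io` provisioner and the `numberOfReplicas` and `staleReplicaTimeout` parameters follow Longhorn's example StorageClass as we understand it; the class name, claim name, and size are hypothetical, so verify the parameters against the Longhorn version you install.

```python
import json


def longhorn_storage_class(name: str, replicas: int) -> dict:
    """Build a StorageClass backed by Longhorn's CSI driver with a given replica count."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "driver.longhorn.io",
        "allowVolumeExpansion": True,
        "reclaimPolicy": "Delete",
        # Longhorn keeps this many replicas of each volume; parameter values must be strings.
        "parameters": {"numberOfReplicas": str(replicas), "staleReplicaTimeout": "2880"},
    }


def pvc(name: str, storage_class: str, size: str) -> dict:
    """Build a PersistentVolumeClaim that requests storage from the named StorageClass."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    items = [longhorn_storage_class("longhorn-3x", 3), pvc("pg-data", "longhorn-3x", "20Gi")]
    # kubectl accepts a v1 List wrapping several objects.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

A database operator or StatefulSet would then reference the claim, leaving replication and rescheduling of the underlying volume to Longhorn.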

Notable sandbox entries

This section explores sandbox projects with momentum or interesting concepts.

K8up (S) is in the same space as Velero (non-CNCF). It runs as an operator in Kubernetes and backs up storage within a given namespace, including the persistent volumes (PVs) that hold data stored by applications running in that namespace. The underlying program is restic, a standard, battle-tested open-source tool for incremental backups and snapshots.
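With K8up, backups are typically declared as a `Schedule` resource in the namespace to be backed up. The Python sketch below builds one. The field names reflect our reading of the K8up v2 documentation and should be checked against the version you deploy; the Secret names, bucket, and endpoint are hypothetical.

```python
import json


def k8up_schedule(name: str, bucket: str, endpoint: str) -> dict:
    """Build a K8up Schedule that backs up PVCs in its namespace to S3-compatible storage."""
    return {
        "apiVersion": "k8up.io/v1",
        "kind": "Schedule",
        "metadata": {"name": name},
        "spec": {
            "backend": {
                # restic encrypts the repository with this password (hypothetical Secret).
                "repoPasswordSecretRef": {"name": "backup-repo", "key": "password"},
                "s3": {
                    "endpoint": endpoint,
                    "bucket": bucket,
                    # Hypothetical Secret holding the S3 access key pair.
                    "accessKeyIDSecretRef": {"name": "backup-credentials", "key": "username"},
                    "secretAccessKeySecretRef": {"name": "backup-credentials", "key": "password"},
                },
            },
            # Cron syntax: nightly backup, weekly repository check and prune.
            "backup": {"schedule": "0 1 * * *"},
            "check": {"schedule": "0 3 * * 0"},
            "prune": {
                "schedule": "0 4 * * 0",
                "retention": {"keepLast": 5, "keepDaily": 14},
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(k8up_schedule("nightly", "backups", "http://minio:9000"), indent=2))
```

Because restic performs the work, the retention block corresponds to a restic forget-and-prune policy: keep the last five snapshots plus 14 daily ones.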

WasmEdge Runtime (S) is a high-performance, sandboxed runtime for WebAssembly (Wasm) programs. Wasm was originally designed for browsers. However, the properties that make it a good lightweight browser runtime, including security and performance, translate well to server environments. As a result, Wasm is leading a renaissance on the server side, with languages such as Rust and Go that can be compiled to Wasm and run outside the browser.

WasmEdge runtime is a WASI (WebAssembly System Interface, non-CNCF) compliant engine and intended to run on the server side, including in resource-constrained edge devices. This project is already being used by customers as part of the Docker+Wasm technical preview.

Submariner (S) is a multi-cluster networking solution that allows multiple Kubernetes clusters to communicate with each other. Running multiple clusters can help organizations isolate resources for different teams, but it can also lead to problems. For example, pods in one cluster may need to go through multiple network hops to reach pods in another cluster. Submariner resolves this issue by creating a flat network space in which pods from different clusters communicate directly.
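A flat network only works if pod and service address ranges do not collide across clusters; Submariner requires non-overlapping CIDRs unless its Globalnet component is enabled. The Python sketch below uses the standard `ipaddress` module to check a set of cluster CIDRs for overlaps before connecting clusters. The cluster names and ranges are hypothetical.

```python
import ipaddress
from itertools import combinations


def overlapping_cidrs(clusters: dict[str, list[str]]) -> list[tuple[str, str, str, str]]:
    """Return (cluster_a, cidr_a, cluster_b, cidr_b) for every overlapping pair across clusters."""
    nets = {
        cluster: [ipaddress.ip_network(cidr) for cidr in cidrs]
        for cluster, cidrs in clusters.items()
    }
    conflicts = []
    # Compare every pair of distinct clusters; ranges within one cluster are not checked.
    for a, b in combinations(sorted(nets), 2):
        for net_a in nets[a]:
            for net_b in nets[b]:
                if net_a.overlaps(net_b):
                    conflicts.append((a, str(net_a), b, str(net_b)))
    return conflicts


if __name__ == "__main__":
    # Pod and service CIDRs per cluster (hypothetical values).
    plan = {
        "east": ["10.42.0.0/16", "10.43.0.0/16"],
        "west": ["10.42.128.0/17", "10.45.0.0/16"],
    }
    for conflict in overlapping_cidrs(plan):
        print("overlap:", conflict)
```

Clusters installed with identical defaults (for example, k3s uses 10.42.0.0/16 for pods and 10.43.0.0/16 for services) will always collide, so it pays to plan address ranges before joining clusters.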

Recommendations and best practices

At the runtime layer, projects use the options below to create container images. Although Kubernetes has standardized on containerd and cri-o, any OCI-compliant image is compatible with these runtimes, so projects have many options for going from code to a built container image.

- Docker (non-CNCF) is still popular for building images, usually with Dockerfiles. This option comes with the most collective knowledge and documentation from the community.

- Podman (non-CNCF) and buildah (non-CNCF) are programs that together do everything Docker does, but with more security safeguards.

- Workloads running on Red Hat OpenShift may use patterns such as Source-to-Image (S2I) to build container images from code.

- Kaniko (non-CNCF) builds container images on Kubernetes without root access and can be used as part of a CI/CD pipeline.

- Cloud Native Buildpacks, a CNCF incubating project, is another solution for converting code to container images. It is based on the buildpacks concept pioneered by Heroku and Cloud Foundry.

- odo (non-CNCF) offers more customization than buildpacks, as well as features such as hot-reloading code into running containers without restarts. Supported on OpenShift, it is a great developer tool and productivity boost.

- The final tool we look at for creating container images is tilt (non-CNCF), which uses Starlark, a dialect of Python, to define “Tiltfiles” that describe how your project’s containers are built and deployed. Like buildpacks and odo, it supports continuous compilation and code updates without restarting containers.
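As an example of the Kaniko option, the Python sketch below builds a Pod manifest that runs the Kaniko executor inside the cluster, with no Docker daemon and no privileged mode. The `--dockerfile`, `--context`, and `--destination` flags and the `/kaniko/.docker` credentials directory come from Kaniko's documentation; the Git repository, registry, and Secret names are hypothetical.

```python
import json


def kaniko_pod(name: str, git_context: str, destination: str) -> dict:
    """Build a Pod that runs the Kaniko executor to build and push an image."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # A build is a one-shot job, so the Pod should not restart.
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "kaniko",
                    "image": "gcr.io/kaniko-project/executor:latest",
                    "args": [
                        "--dockerfile=Dockerfile",
                        f"--context={git_context}",
                        f"--destination={destination}",
                    ],
                    # Kaniko reads registry credentials from /kaniko/.docker/config.json.
                    "volumeMounts": [{"name": "registry-creds", "mountPath": "/kaniko/.docker"}],
                }
            ],
            "volumes": [
                {
                    "name": "registry-creds",
                    "secret": {
                        "secretName": "registry-creds",  # hypothetical docker-registry Secret
                        "items": [{"key": ".dockerconfigjson", "path": "config.json"}],
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    pod = kaniko_pod("build-demo", "git://github.com/example/app.git", "registry.example.com/app:1.0")
    print(json.dumps(pod, indent=2))
```

In a CI/CD pipeline, the same container image and arguments are usually run as a pipeline step rather than as a hand-written Pod.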

Many tools can convert code to OCI-compliant container images. Tools such as tilt can significantly boost developer productivity and are worth exploring.

Other CNCF projects in this layer are best used through cloud and product vendors. For example, Longhorn (I) is offered as a packaged product with the Rancher Kubernetes distribution.

Contributing to CNCF projects

All CNCF projects are open source under Apache License 2.0 and hosted on GitHub. Each project's repository has a README that explains how to start using the project, set up a development environment, and contribute.

While Kubernetes is a large project with a stable governance structure and detailed steps for contribution, the structure and steps may differ slightly for other CNCF projects. Read, explore, and gain enough knowledge to become comfortable working with the build and codebase of your chosen project. Many projects assume a Linux or Linux-like environment, such as macOS or Windows Subsystem for Linux (WSL), and use tooling that works well in such an environment.

- Most CNCF projects label beginner-friendly issues as "good first issue". Read through an issue's discussion before picking it up to make sure no one else is already working on it.

- Improving the documentation is another good first contribution. Before making any changes, create an issue and make sure a project maintainer agrees with the proposed change.
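Finding candidate issues can be scripted. The Python sketch below builds a GitHub search URL for open issues labeled "good first issue" in a given repository, optionally adding the `no:assignee` search qualifier to skip issues someone has already claimed. Label names vary by project, so treat "good first issue" as an assumption to verify in each repository; the function name is hypothetical.

```python
from urllib.parse import urlencode


def good_first_issue_search(repo: str, unassigned_only: bool = True) -> str:
    """Build a GitHub issue-search URL for open 'good first issue' items in `repo` (owner/name)."""
    qualifiers = [f"repo:{repo}", "is:issue", "is:open", 'label:"good first issue"']
    if unassigned_only:
        # no:assignee excludes issues that already have someone assigned.
        qualifiers.append("no:assignee")
    # urlencode quotes ':', '/', and '"', and turns spaces into '+'.
    return "https://github.com/search?" + urlencode({"q": " ".join(qualifiers), "type": "issues"})


if __name__ == "__main__":
    print(good_first_issue_search("cilium/cilium"))
```

An assignee is only a signal, so the advice above still applies: read the issue thread before starting work.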

The first issue will take the longest, but subsequent issues will gradually become easier.

Get to know the people involved in the project and attend weekly technical committee meetings. The more you know about and interact with other technical experts, the more you benefit.

Go is the predominant development language for CNCF-hosted incubating and graduated projects; other languages include TypeScript, Python, C++, Rust, Java, and shell script. The table below lists languages by project.

| Language/framework | CNCF projects (I, G) |
|---|---|
| Go | Helm, Operator Framework, KubeVirt, Buildpacks, Argo, Flux, Keptn, Vitess, NATS, Chaos Mesh, Prometheus, Thanos, Cortex, OpenMetrics, Jaeger, etcd, CoreDNS, Kubernetes, Crossplane, Volcano, Linkerd, Istio, KubeEdge, Harbor, Dragonfly, SPIRE, Open Policy Agent (OPA), cert-manager, Kyverno, Notary, Cilium, Container Network Interface (CNI), Rook, containerd, cri-o, Dapr, Keda, Knative |
| TypeScript, JavaScript | Backstage, Argo, Keptn, Vitess, Chaos Mesh, Litmus, Prometheus, Jaeger, gRPC, Linkerd, Envoy, Contour, Harbor, Open Policy Agent (OPA), CubeFS, Dapr |
| Python, Starlark | KubeVirt, Vitess, TiKV, CloudEvents, Chaos Mesh, OpenMetrics, Jaeger, Emissary-Ingress, etcd, gRPC, Kubernetes, Volcano, Istio, Envoy, Cloud Custodian, Harbor, Open Policy Agent (OPA), The Update Framework (TUF), cert-manager, Falco, Notary, in-toto, Cilium, Rook, Longhorn, CubeFS, Dapr |
| C++ | gRPC, Envoy, Open Policy Agent (OPA), Falco, CubeFS |
| Rust | TiKV, Linkerd, Envoy |
| Java, Kotlin | Vitess, Envoy, CubeFS, Dapr |

Most CNCF projects also use shell scripts, Makefiles, Dockerfiles, and Helm charts.

References

- https://glossary.cncf.io/tags/?all=true

- Open Container Initiative (opencontainers.org)

- Container Storage Interface (GitHub)

- SMI: A standard interface for service meshes on Kubernetes (smi-spec.io)

- https://cilium.io/

- https://www.cni.dev/

- https://rook.io/

- https://longhorn.io/

- https://cubefs.io/

- https://containerd.io/

- https://cri-o.io/

- Apache License, Version 2.0

- Kubernetes contributor guide (kubernetes/community on GitHub)

- https://restic.net/

- https://k8up.io/k8up/2.7/index.html

- https://velero.io/

- https://kubernetes.io/docs/concepts/storage/persistent-volumes/

- https://wasmedge.org/

- https://wasi.dev/

- https://www.Docker.com/blog/Docker-wasm-technical-preview/

- https://submariner.io/

- https://developers.redhat.com/articles/2023/02/15/build-smaller-container-images-using-s2i

- https://podman.io/

- https://buildah.io/

- https://github.com/GoogleContainerTools/kaniko

- https://buildpacks.io/

- https://odo.dev/

- https://tilt.dev/

- https://github.com/bazelbuild/starlark
