Artificial Intelligence

AI Solution Workflow for Relatable Business Requirements

This whitepaper explores a common AI workflow that can address the majority of an enterprise's business needs.

Insights

- In rapidly changing enterprise technology landscapes, a common AI workflow has become one of the prime ways to address a wide range of business needs.

- This whitepaper examines that common AI workflow and shows the reader how to choose the best algorithm or model and apply it within the workflow.

Introduction

- A leading hotel expects an AI solution to reduce the revenue lost to room cancellations.

- A telecom company expects an AI solution to identify the customers who are likely to port out.

- A sports club wants to increase its revenue by enrolling the right customers with the help of an AI solution.

Fortunately, the same AI workflow can provide solutions to business needs of this kind. This helps developers choose the best algorithm or model and apply it within the workflow.

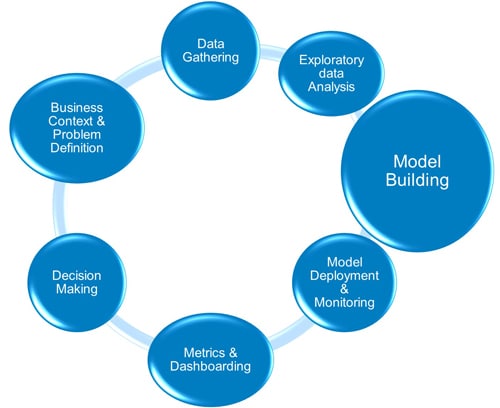

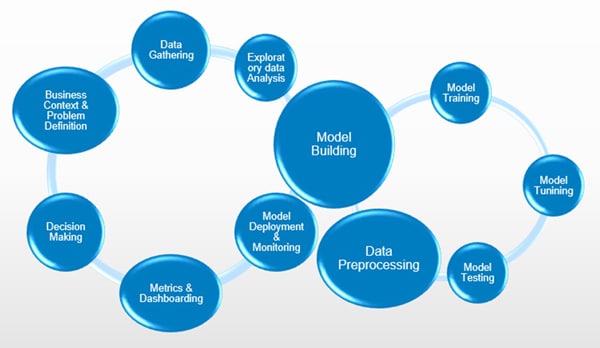

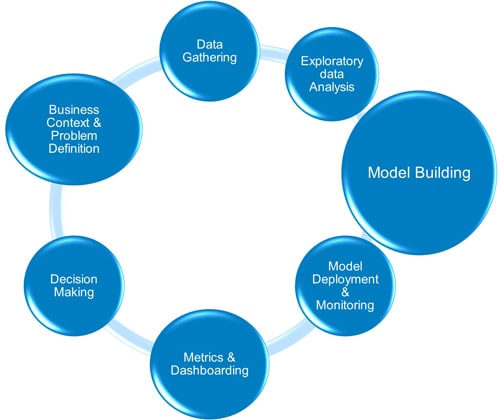

Common workflow

An AI solution follows a workflow whose stages may repeat or iterate at any point. The process runs from analyzing the business requirements all the way through to decision making.

Business Context and Problem Definition

Identifying the business context and the problem statement is key to choosing the algorithm and modeling approach. Because modeling deals with numbers, it helps to capture the current business situation numerically, for example as revenue or transaction figures. This baseline makes it possible to measure the benefit of the solution once it is in production.

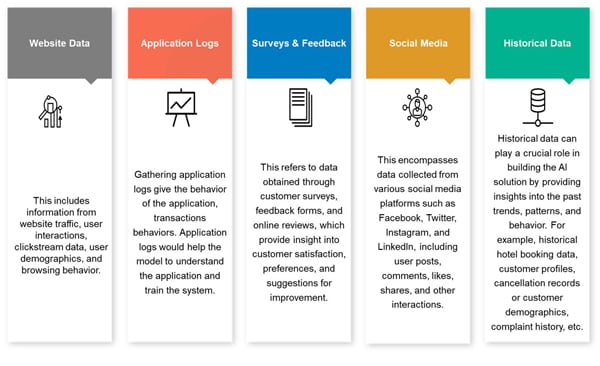

Data gathering

The data needed for building any AI solution is usually obtained from multiple sources depicted below.

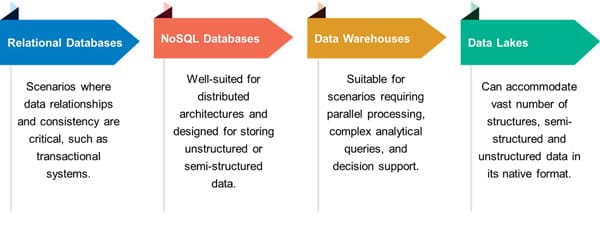

Data from the different sources is collected and stored in data stores in an organized and secure manner. There are multiple ways to store the data, and the right storage option depends on the problem we are trying to solve.

Possible options include, but are not limited to, those listed below:

For the example scenarios mentioned in the introduction, a relational database is a good fit. Relational databases are made up of tables, which are collections of data organized into rows and columns. The rows represent individual records, and the columns represent the attributes that make up each record.
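The rows-and-columns idea can be made concrete with a minimal sketch that uses Python's built-in `sqlite3` module. The `bookings` table, its columns, and the sample values are hypothetical and loosely modelled on the hotel cancellation scenario. A production system would typically use a server-based relational database rather than an in-memory SQLite file.

```python
import sqlite3

# Hypothetical hotel schema: each row is one booking, each column one attribute.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE bookings (
        booking_id  INTEGER PRIMARY KEY,
        lead_time   INTEGER,  -- days between booking and arrival
        room_type   TEXT,
        avg_price   REAL,
        is_canceled INTEGER   -- 1 = canceled, 0 = kept
    )
    """
)
conn.executemany(
    "INSERT INTO bookings VALUES (?, ?, ?, ?, ?)",
    [
        (1, 120, "Deluxe", 180.0, 1),
        (2, 5, "Standard", 95.0, 0),
        (3, 45, "Suite", 310.0, 0),
    ],
)
conn.commit()

# Columns come from the table definition; rows are the stored records.
columns = [info[1] for info in conn.execute("PRAGMA table_info(bookings)")]
rows = conn.execute("SELECT * FROM bookings ORDER BY booking_id").fetchall()
print(columns)  # ['booking_id', 'lead_time', 'room_type', 'avg_price', 'is_canceled']
print(rows[1])  # (2, 5, 'Standard', 95.0, 0)
```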

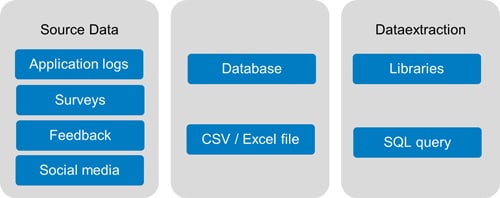

Once the data is stored in the databases, we can extract the necessary data in multiple ways.

Export as structured format

This method exports a selected subset or the entire dataset into a processable format such as CSV (Comma-Separated Values) or Excel. These files can easily be opened and analyzed with spreadsheet software or with programming languages such as Python.
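The following is a minimal sketch of exporting a table to CSV and reading it back with Python's standard `csv` and `sqlite3` modules. The `bookings` table and the `export_to_csv` helper are hypothetical. An in-memory buffer stands in for a file on disk so the example runs anywhere.

```python
import csv
import io
import sqlite3

# Hypothetical bookings table standing in for the enterprise database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE bookings (booking_id INTEGER, room_type TEXT, is_canceled INTEGER)")
conn.executemany(
    "INSERT INTO bookings VALUES (?, ?, ?)",
    [(1, "Deluxe", 1), (2, "Standard", 0), (3, "Suite", 0)],
)

def export_to_csv(connection, table, out):
    """Write every row of `table` to the file-like object `out` as CSV.

    The table name is formatted into the SQL, so it must come from trusted code,
    never from user input.
    """
    cursor = connection.execute(f"SELECT * FROM {table}")
    writer = csv.writer(out)
    writer.writerow([col[0] for col in cursor.description])  # header row
    writer.writerows(cursor)                                   # data rows

buffer = io.StringIO()  # for a real file: open("bookings.csv", "w", newline="")
export_to_csv(conn, "bookings", buffer)

# Read the exported data back, as an analyst would in Python.
buffer.seek(0)
records = list(csv.DictReader(buffer))
print(records[0])  # {'booking_id': '1', 'room_type': 'Deluxe', 'is_canceled': '1'}
```

Note that CSV stores everything as text, so values come back as strings and must be converted to numbers before analysis.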

Querying from the database

This involves running queries using query languages like SQL on the database to retrieve specific data based on predefined conditions, allowing for more targeted and customized data extraction for analysis or reporting purposes. The SQL queries can be executed using programming languages like Python, C#, etc. by establishing a connection to the database.
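The sketch below runs a conditional SQL query from Python through `sqlite3`. The `customers` table, its columns, and the "at-risk" rule (tenure under 12 months and two or more complaints) are illustrative assumptions for the telecom scenario, not a recommended churn definition. The `?` placeholders pass the conditions as parameters instead of pasting them into the SQL text.

```python
import sqlite3

# Hypothetical telecom table; a real system would connect to its own database server.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (customer_id INTEGER, tenure_months INTEGER, "
    "monthly_charge REAL, complaints INTEGER)"
)
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?, ?)",
    [(101, 3, 80.0, 4), (102, 48, 45.0, 0), (103, 7, 95.0, 2), (104, 30, 60.0, 1)],
)

# Targeted extraction: short-tenure customers with repeated complaints.
query = """
    SELECT customer_id, tenure_months, complaints
    FROM customers
    WHERE tenure_months < ? AND complaints >= ?
    ORDER BY customer_id
"""
at_risk = conn.execute(query, (12, 2)).fetchall()
print(at_risk)  # [(101, 3, 4), (103, 7, 2)]
```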

Data can be extracted from any source medium, such as applications, surveys, or social media, and converted into structured data in a database or CSV files. This structured information can then be processed using libraries or SQL queries.

Exploratory Data Analysis

Suppose you want to create a car's design and decide to analyze the data you have collected. Exploratory Data Analysis (EDA) is like examining the car's components and understanding their characteristics. In this stage, you explore the data to identify trends, patterns, and potential issues. For example, you analyze the historical maintenance records, customer feedback, and vehicle performance data to gain insights into common problems and customer preferences. With this understanding, you can make informed decisions on how to enhance the car's design.

Exploratory Data Analysis (EDA) plays a very important role in an end-to-end AI solution. It enables:

- Understanding the Data: EDA comprehensively reveals the dataset's structure, patterns, and issues. It assesses data quality, identifies missing values, outliers, and inconsistencies, vital for preprocessing and model development.

- Identifying Data Patterns and Insights: EDA discovers meaningful patterns, trends, and relationships through statistical techniques, visualizations, and summarization. It guides model development, hypothesis generation, and decision-making.

- Feature Selection and Engineering: EDA identifies relevant features by analyzing variable relationships, exploring correlations, and visualizing distributions. It improves model prediction by selecting informative features and creating new ones through feature engineering.
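A first EDA pass can be sketched in plain Python: count missing values, summarize each column, and check how strongly a feature moves with the target. The column names and values are hypothetical hotel booking data, and the correlation is a hand-written Pearson coefficient. In practice, libraries such as pandas provide these summaries directly.

```python
import statistics

# Hypothetical hotel booking sample; None marks a missing value.
data = {
    "lead_time":   [120, 5, 45, None, 200, 10, 90, 150],
    "avg_price":   [180.0, 95.0, 310.0, 120.0, 175.0, None, 140.0, 160.0],
    "is_canceled": [1, 0, 0, 0, 1, 0, 0, 1],
}

def summarize(values):
    """Profile one numeric column: missing count, mean, median and range."""
    present = [v for v in values if v is not None]
    return {
        "missing": len(values) - len(present),
        "mean": statistics.mean(present),
        "median": statistics.median(present),
        "min": min(present),
        "max": max(present),
    }

def pearson(xs, ys):
    """Pearson correlation computed over rows where both values are present."""
    pairs = [(x, y) for x, y in zip(xs, ys) if x is not None and y is not None]
    xs_, ys_ = zip(*pairs)
    mx, my = statistics.mean(xs_), statistics.mean(ys_)
    cov = sum((x - mx) * (y - my) for x, y in pairs)
    sx = sum((x - mx) ** 2 for x in xs_) ** 0.5
    sy = sum((y - my) ** 2 for y in ys_) ** 0.5
    return cov / (sx * sy)

for name, values in data.items():
    print(name, summarize(values))

# Do longer lead times go together with more cancellations?
print("corr(lead_time, is_canceled) =",
      round(pearson(data["lead_time"], data["is_canceled"]), 2))
```

A strong positive correlation here would flag `lead_time` as a candidate feature for the model. It is a hint for feature selection, not proof of cause.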

Model building

The AI model is the 'heart' of our AI solution. The model serves as the core component that brings intelligence and functionality to an end-to-end AI solution. It leverages learned patterns and insights to generate predictions or perform tasks, enabling organizations to make data-driven decisions, automate processes, and unlock valuable insights from their data.

The model building step of an AI solution can be further broken down into the sub-steps described below.

Data Preprocessing

Now that you have a better understanding of the data, it's time to preprocess it to ensure it is in the right format for modeling. Data preprocessing is like preparing the car's components for assembly. In this stage, you clean the data by handling missing values, removing duplicates, and resolving inconsistencies. In the car example, you would need to start by gathering all the necessary parts. However, the parts might not all be the same size or shape. They might also be dirty or damaged. Before you can start building the car, you would need to clean and prepare the parts. This would involve removing any dirt or damage, and making sure that all the parts are the same size and shape.

Data preprocessing is a crucial step as it enables the following.

- Removing duplicate data: If your data contains duplicate records, it can skew your results. For example, if you are trying to calculate the total sales of a product and your data includes duplicate sales records, the total will be artificially inflated.

- Correcting errors: If your data contains errors, it can lead to inaccurate results. For example, if your data includes a product with a price of 1000, but the correct price is 100, your analysis will be inaccurate.

- Filling in missing values: If your data contains missing values, it can make it difficult to analyze. For example, if you are trying to calculate the average age of a group of people, and your data includes missing ages, you will not be able to calculate an accurate average.

- Transforming the data: Sometimes, it is necessary to transform the data into a different format to make it easier to analyze. For example, if your data is in the form of text, you may need to convert it into numbers to perform statistical analysis.
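The sketch below applies three of these steps to a handful of hypothetical sales records: it removes exact duplicates, corrects an implausible price, and fills in missing values. The reference price list and the rule that any price above five times the list price is a data-entry error are assumptions made for illustration. Real cleaning rules should come from the business.

```python
import statistics

# Hypothetical raw sales records: (order_id, product, price, quantity).
raw = [
    (1, "Lamp", 100.0, 2),
    (1, "Lamp", 100.0, 2),      # exact duplicate of the row above
    (2, "Desk", None, 1),       # missing price
    (3, "Lamp", 1000.0, 1),     # entry error: the correct price is 100
    (4, "Chair", 45.0, None),   # missing quantity
]

# Assumed reference price list; a real pipeline would use a trusted master table.
LIST_PRICES = {"Lamp": 100.0, "Desk": 250.0, "Chair": 45.0}
MAX_RATIO = 5  # assumed rule: a price above 5x the list price is a typo

def preprocess(rows):
    # Remove exact duplicates, keeping the first occurrence and the original order.
    unique = list(dict.fromkeys(rows))

    # Median of the known quantities, used to fill the missing ones.
    median_qty = statistics.median(r[3] for r in unique if r[3] is not None)

    cleaned = []
    for order_id, product, price, qty in unique:
        list_price = LIST_PRICES[product]
        if price is None:                       # fill a missing price
            price = list_price
        elif price > MAX_RATIO * list_price:    # correct an implausible price
            price = list_price
        if qty is None:                         # fill a missing quantity
            qty = median_qty
        cleaned.append((order_id, product, price, qty))
    return cleaned

for row in preprocess(raw):
    print(row)
```

The median is used for filling quantities because, unlike the mean, it is not pulled by extreme values.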

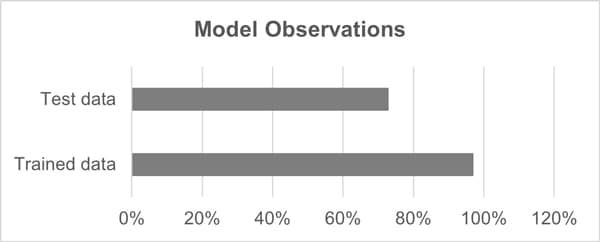

Additionally, we encode the categorical variables as numerical values so that the model can perform calculations on them. We also divide the data into two parts: 70% of the data is used for training and the remaining 30% for testing.
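Both steps can be sketched without external libraries. Categorical values are one-hot encoded (one 0/1 column per category), which is one common encoding choice rather than the only one. The rows are then shuffled with a fixed seed before 70% go to training and 30% to testing. The data and helper names are hypothetical; in most projects, scikit-learn's `train_test_split` serves the same purpose.

```python
import random

# Hypothetical booking rows: (room_type, lead_time, is_canceled).
rows = [("Deluxe", 120, 1), ("Standard", 5, 0), ("Suite", 45, 0), ("Standard", 200, 1),
        ("Deluxe", 10, 0), ("Suite", 90, 0), ("Standard", 150, 1), ("Deluxe", 30, 0),
        ("Suite", 60, 1), ("Standard", 15, 0)]

# One-hot encode room_type: one 0/1 column per category, so the model does not
# read an artificial order into labels such as Deluxe < Standard < Suite.
categories = sorted({room for room, _, _ in rows})

def encode(room, lead_time):
    return [1 if room == c else 0 for c in categories] + [lead_time]

X = [encode(room, lead) for room, lead, _ in rows]
y = [label for _, _, label in rows]

def train_test_split(X, y, test_size=0.3, seed=42):
    """Shuffle the row indices, then hold out `test_size` of the rows for testing."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)  # a fixed seed keeps the split reproducible
    n_test = round(len(X) * test_size)
    test, train = idx[:n_test], idx[n_test:]
    return ([X[i] for i in train], [X[i] for i in test],
            [y[i] for i in train], [y[i] for i in test])

X_train, X_test, y_train, y_test = train_test_split(X, y)
print(categories)                  # ['Deluxe', 'Standard', 'Suite']
print(len(X_train), len(X_test))   # 7 3
```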

Model Training and Evaluation

During this stage, you use the prepared data to train a model that can make decisions based on that data. In the car example, you would collect information on how to accelerate, brake, and turn under different conditions such as speed limits, road conditions, and traffic signals. The goal is to create a model that can learn from this data and make accurate decisions.

Training an AI model is important because it allows machines to learn and perform tasks without explicit programming. It enables the following:

- Learning from Data: AI models, such as machine learning and deep learning algorithms, learn patterns and make predictions based on data. Through training, the model can identify underlying patterns, correlations, and relationships in the data, enabling it to make accurate predictions or perform specific tasks.

- Generalization and Adaptability: By training an AI model on diverse and representative data, it can learn generalizable patterns and rules that can be applied to new, unseen data. A well-trained model can adapt and make accurate predictions or decisions on new data points it hasn't encountered before.

- Optimization and Performance Improvement: During the training process, AI models adjust their internal parameters and weights to minimize errors or maximize performance on the training data. Training allows models to fine-tune their internal mechanisms to achieve the best possible performance on the given task.
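To illustrate what "adjusting internal parameters to minimize errors" means in practice, here is a minimal logistic regression trained by batch gradient descent in plain Python. The two scaled features, the labels, the learning rate, and the number of epochs are all hypothetical choices for illustration. Real projects would normally use a library implementation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(X, y, lr=0.5, epochs=2000):
    """Fit weights w and bias b by batch gradient descent on the average log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Prediction error for this example: predicted probability minus label.
            error = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += error * xi[j]
            grad_b += error
        # Step every parameter against its average gradient to reduce the loss.
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(w, b, x, threshold=0.5):
    return int(sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= threshold)

# Hypothetical, already-scaled features: [lead_time, price]; label 1 = canceled.
X_train = [[0.9, 0.2], [0.1, 0.5], [0.8, 0.4], [0.2, 0.1], [0.7, 0.9], [0.05, 0.3]]
y_train = [1, 0, 1, 0, 1, 0]

w, b = train_logistic_regression(X_train, y_train)
train_acc = sum(predict(w, b, x) == t for x, t in zip(X_train, y_train)) / len(y_train)
print("weights:", [round(v, 2) for v in w], "bias:", round(b, 2))
print("training accuracy:", train_acc)
```

Training accuracy alone says nothing about unseen data. That is why the model is also evaluated on the held-out test set.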

Observations from Model Evaluation

Suppose our AI model scores 99% on the training data but only 76% on the test data. The model then works well on the data it was trained on, but it fails to replicate that performance on unseen data. This behavior is known as overfitting.

This poses a problem, because the goal is to make predictions for new reservations that have not come in yet, and we do not want a model that performs poorly on such unseen data.
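The comparison between training and test scores can be turned into a simple rule of thumb. The thresholds below (a maximum gap of 5 percentage points and a minimum training score of 0.80) are assumptions for illustration, not standard values; each team should set its own.

```python
def diagnose_fit(train_score, test_score, max_gap=0.05, min_score=0.80):
    """Rough fit check; max_gap and min_score are assumed thresholds."""
    if train_score < min_score:
        return "underfitting: the model misses patterns even in the training data"
    if train_score - test_score > max_gap:
        return "overfitting: strong on training data, weak on unseen data"
    return "good fit: performance carries over to unseen data"

print(diagnose_fit(0.99, 0.76))  # the scenario above: overfitting
print(diagnose_fit(0.85, 0.83))  # good fit
print(diagnose_fit(0.62, 0.60))  # underfitting
```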

Model Tuning

In this stage, you adjust the model parameters to improve its accuracy, efficiency, or other desired qualities. In the car example, you might tweak the engine, suspension, and other components to enhance its fuel efficiency, handling, or safety features. You wouldn't just fill up the tank with gas and go. You would adjust the tire pressure, check the oil level, and tune the engine. The same is true for machine learning models.

Model tuning is important for:

- Optimizing Performance: Model tuning finds the configuration of hyperparameters that maximizes the performance of the AI model. By systematically adjusting hyperparameters such as the learning rate, regularization strength, or tree depth, it is possible to find the combination that yields the best results and improves the model's accuracy, precision, recall, or other performance metrics.

- Determining the right fit: Model tuning helps find the set of model parameters that yields the best results. Tuning strikes a balance that gives the model the right level of complexity, so that it neither fails to capture the underlying patterns in the data (underfitting) nor learns the training data so closely that it fails to generalize to new data (overfitting).

- Adapting to Data Characteristics: Model tuning adapts the model to the specific characteristics of the data at hand. Different datasets may require different hyperparameter settings to achieve the best performance. Tuning makes it possible to adapt to data variations, handle different data distributions, or account for specific data properties, ultimately improving the model's ability to generalize and make accurate predictions.
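Hyperparameter tuning can be sketched as a small grid search. For each candidate value, you train the model, score it on a validation set held apart from the training data, and keep the best one. In this sketch the model is a k-nearest-neighbours classifier and the hyperparameter is `k`. The model choice, the data, and the candidate values are all assumptions for illustration. The training data deliberately contains one mislabelled point. As a result, a very small `k` memorizes that noise, while a very large `k` gets pulled toward the majority class.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, x, k):
    """Majority vote among the k training points closest to x (Euclidean distance)."""
    nearest = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def accuracy(train_X, train_y, X, y, k):
    hits = sum(knn_predict(train_X, train_y, xi, k) == yi for xi, yi in zip(X, y))
    return hits / len(y)

def grid_search(train_X, train_y, val_X, val_y, k_values):
    """Score every candidate k on the validation set and keep the best one."""
    scores = {k: accuracy(train_X, train_y, val_X, val_y, k) for k in k_values}
    best_k = max(scores, key=scores.get)  # ties go to the k listed first
    return best_k, scores

# Hypothetical 2-D data: class 0 near (0, 0), class 1 near (1, 1).
# (0.1, 0.1) is deliberately mislabelled as 1 to simulate noise.
train_X = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (0.1, 0.1),
           (1.0, 1.0), (0.9, 0.8), (0.8, 1.0), (1.1, 0.9)]
train_y = [0, 0, 0, 1, 1, 1, 1, 1]
val_X = [(0.12, 0.12), (0.05, 0.15), (0.95, 0.95), (0.85, 0.9)]
val_y = [0, 0, 1, 1]

best_k, scores = grid_search(train_X, train_y, val_X, val_y, k_values=[1, 3, 5, 7])
print(scores)   # {1: 0.5, 3: 1.0, 5: 1.0, 7: 0.5}
print(best_k)   # 3
```

The U-shaped scores mirror the "right fit" bullet. `k = 1` overfits the noisy point and `k = 7` underfits, while the values in between generalize to the validation points.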

Model Testing

In this stage, you simulate different scenarios and evaluate how well the model responds. For the car example, you would assess how the car handles various driving conditions, such as highways, urban roads, and off-road terrains. Testing helps identify any issues or weaknesses in the model that need to be addressed.

Model testing is crucial for:

- Validating model performance: Testing helps assess how well the model performs under various conditions and scenarios.

- Identifying and mitigating errors or flaws: Testing helps uncover any errors, bugs, or weaknesses in the model.

- Assessing model robustness and generalizability: Testing helps evaluate the model's performance on new, unseen data.

- Building user trust and confidence: Model testing instills trust in the model's capabilities and predictions.
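Testing usually means scoring predictions on held-out data that the model never saw during training. The sketch below computes the confusion matrix, accuracy, precision, and recall for a binary classifier. The labels and predictions are hypothetical values for the telecom port-out scenario.

```python
def evaluate(y_true, y_pred, positive=1):
    """Confusion-matrix counts and common metrics for a binary classifier."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    tn = len(y_true) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0  # of flagged customers, how many port out
    recall = tp / (tp + fn) if tp + fn else 0.0     # of porting customers, how many were flagged
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "confusion": {"tp": tp, "fp": fp, "fn": fn, "tn": tn},
    }

# Hypothetical held-out labels (1 = customer ports out) and model predictions.
y_test = [1, 0, 1, 1, 0, 0, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 0, 1, 0, 0]
print(evaluate(y_test, y_pred))
```

For these values, the model is right on 8 of 10 customers (accuracy 0.8), and both precision and recall are 0.75.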

Model Deployment and Monitoring

Once the car has been built and tested, it's time to put it into action. Model deployment is like launching a car for production and public use. In this stage, you expose the model and make it ready for making predictions and supporting decision-making.

Model deployment facilitates:

- Realizing Value: Model deployment is necessary to derive value from the developed AI solution. By deploying the model into a production environment, businesses can leverage its capabilities to automate processes, make predictions, provide recommendations, or generate insights. Model deployment allows organizations to put their AI solution into action and start reaping the benefits.

- Scalability and Efficiency: Deploying a model enables scalability and efficiency in handling large volumes of data and making predictions or processing requests in real time. The model can be integrated into existing systems and applications, allowing for seamless and automated decision-making at scale. Deployment ensures that the AI solution can handle the demands of the operational environment effectively.

- Continuous Learning and Improvement: Model deployment facilitates the collection of real-world data, which can be used to monitor model performance and gather feedback. By continuously monitoring the model's output and gathering user feedback, organizations can iteratively improve the model, enhance its accuracy, and adapt it to changing conditions. Deployment allows for ongoing learning and refinement of the AI solution.

- Business Impact and Decision Support: Deployed models provide valuable insights and predictions that support decision-making processes. By integrating AI into business operations, organizations can leverage the model's outputs to make informed decisions, optimize processes, improve customer experiences, and gain a competitive edge. Model deployment enables the practical utilization of AI for generating business impact.

Types of Model Deployment

There are generally two main modes of making predictions with a deployed AI model:

- Batch Prediction: In batch prediction mode, predictions are made on a batch of input data all at once. This mode is suitable when we have a large set of data that needs predictions in a batch process, such as running predictions on historical data or performing bulk predictions on a scheduled basis.

- Real-time (or Interactive) Prediction: In real-time or interactive prediction mode, predictions are made on individual data points in real-time as they arrive. This mode is suitable when you need immediate or on-demand predictions for new and incoming data.

The choice of prediction mode depends on the specific requirements and use case of the deployed AI model. Batch prediction is preferable when efficiency in processing large volumes of data is important, while real-time prediction is suitable for scenarios that require immediate or interactive responses to new data.
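The two modes can share one scoring function and differ only in how the records arrive. In this sketch, the weights, the `booking_id` and `features` field names, and the chunk size are hypothetical. A real deployment would typically wrap `handle_request` in a web service and run `batch_predict` from a scheduler.

```python
import math
from typing import Iterable, Iterator

# Hypothetical trained model: weights for [lead_time_scaled, price_scaled].
WEIGHTS, BIAS = (4.0, 1.0), -2.5

def predict_proba(features):
    """Shared scoring logic used by both prediction modes."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))

def batch_predict(records: Iterable[dict], chunk_size: int = 1000) -> Iterator[list]:
    """Batch mode: score stored records chunk by chunk, e.g. in a nightly job."""
    chunk = []
    for record in records:
        chunk.append((record["booking_id"], predict_proba(record["features"])))
        if len(chunk) == chunk_size:
            yield chunk
            chunk = []
    if chunk:  # emit the final, partially filled chunk
        yield chunk

def handle_request(payload: dict) -> dict:
    """Real-time mode: score one incoming record and respond immediately."""
    p = predict_proba(payload["features"])
    return {"booking_id": payload["booking_id"], "cancel_probability": round(p, 3)}

# Usage: five stored bookings scored in chunks of two, then one live request.
stored = [{"booking_id": i, "features": (i / 10, 0.5)} for i in range(5)]
for chunk in batch_predict(stored, chunk_size=2):
    print(len(chunk), "predictions")
print(handle_request({"booking_id": 42, "features": (0.9, 0.3)}))
```

Because both paths call the same `predict_proba`, batch and real-time results stay consistent for the same input.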

Metrics and Dashboarding

Businesses need to know how they are performing, where they are spending their money, and what their customers are doing, because this information helps them make better decisions. Metrics and dashboarding are the tools businesses use to track their performance. Metrics are the specific measurements a business tracks, and dashboards are visual displays of those metrics that show performance at a glance. By tracking their performance, businesses can identify the areas where they are doing well and the areas where they need to improve.

Here are some of the benefits of using metrics and dashboarding:

- Improved decision-making: By tracking their performance, businesses can make better decisions about how to allocate their resources, target their marketing campaigns, and improve their customer service.

- Increased efficiency: By identifying areas where they are doing well and areas where they need to improve, businesses can become more efficient and productive.

- Increased visibility: Dashboards provide a visual display of metrics, which makes it easy for businesses to see how they are performing at a glance.

- Improved communication: Dashboards can be used to communicate performance metrics to employees, managers, and stakeholders.

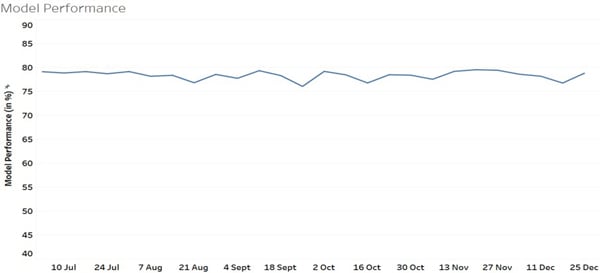

Sample dashboard for model performance

Decision Making

An AI model helps the business make decisions and determine the impact of the implementation. The trends in model performance, together with the business revenue metrics, are useful for the data team, who can use them to:

- Monitor the model's performance over time.

- Correlate it with financial numbers to gauge the business impact.

- Set thresholds for the acceptable lower limit of model performance.

- Decide when to retrain the model.
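The threshold-and-retrain idea can be sketched as a small check over weekly monitoring snapshots. The accuracy floor of 0.80, the two-week patience rule, and the snapshot values are assumptions for illustration. The actual thresholds should be agreed with the business based on the financial impact.

```python
from dataclasses import dataclass

@dataclass
class WeeklySnapshot:
    week: str
    accuracy: float      # model accuracy on that week's known outcomes
    revenue_loss: float  # business metric, e.g. revenue lost to cancellations

ACCURACY_FLOOR = 0.80  # assumed acceptable lower limit, agreed with the business
PATIENCE = 2           # assumed rule: act after 2 consecutive weeks below the floor

def needs_retraining(history, floor=ACCURACY_FLOOR, patience=PATIENCE):
    """True when each of the last `patience` snapshots is below `floor`."""
    recent = history[-patience:]
    return len(recent) == patience and all(s.accuracy < floor for s in recent)

# Hypothetical monitoring history pairing model quality with a financial number.
history = [
    WeeklySnapshot("week 1", 0.86, 12000.0),
    WeeklySnapshot("week 2", 0.84, 12500.0),
    WeeklySnapshot("week 3", 0.79, 15800.0),
    WeeklySnapshot("week 4", 0.77, 17100.0),
]

for s in history:
    flag = "below floor" if s.accuracy < ACCURACY_FLOOR else "ok"
    print(f"{s.week}: accuracy={s.accuracy:.2f} revenue_loss={s.revenue_loss:,.0f} ({flag})")

print("retrain:", needs_retraining(history))  # retrain: True
```

Requiring more than one bad week before retraining avoids reacting to a single noisy week. The right patience value is again a business decision.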


Disclaimers: Any AI solution should adhere to the principles of accountability, reliability, safety, and security. These elements are not covered in this document, which describes a common workflow rather than a complete solution. Continuous monitoring after the solution goes into production also helps improve it over time.

Subscribe

To keep yourself updated on the latest technology and industry trends subscribe to the Infosys Knowledge Institute's publications
